How to Build Production-Grade Data Architecture on Google Cloud (Without Breaking the Bank)

By Tobi Williams Babatunde

In 2017 when I started learning Power BI and building data products, I was absolutely glued on Power Query - a self-service tool for data preparation and data transformation available with Excel and Power BI. Why? It was simple, seamless, and sublime - it was logic, modelling, and automation. I felt like (I still do) I could achieve anything I set my mind to accomplish.

Indeed my reluctance to learn how to use any other data tool or language like SQL was as a result of my prowess at using Power Query. I felt limitless. While I was adept at data modelling and creating relationships between tables (1 - 1, 1 - many, etc), I lacked the foundations of building scalable databases and data architecture that could power cloud native systems and business intelligence applications.

It has been many years since then - I've grown in humility, technical depth and product management prowess, and my understanding of building end-to-end data-hungry applications has deepened.

What I will be sharing in this exposition is not only about writing clean SQL or running python and automations using severless architecture. It's fundamentally about designing a scalable, version-controlled approach to data analytics architecture that can seamlessly power applications beyond business dashboards that serve thousands of users. It's a framework for production-grade Data Analytics Engineering and Real-Time Analytics in Google Cloud.

Introduction

In many ways, the skills gained from mastering Power BI and Power Query for data transformation are what trickled down to building better data models with SQL and automating business data workflows. Years ago, I spent endless hours on Chris Webb's blog and Guy in a Cube learning how to use Power Query better but these days, generative AI like ChatGPT, Gemini and Claude do the job for you with clear prompts in natural language. I will cover how some of my workflows with AI for data problem-solving in another post.

What I'm driving at here is simple - transferability. Data skills are easily transferrable from one tool to another, ergo, don't feel stuck or obligated to using only one tool for everything - forever. A tool is merely a means to an end.

In 2022 when we made the move to build an integrated learning platform and associated products from the ground up, I was not prepared for the scale of reiteration and feature refinements involved in order to find product-market fit and build a world-class product.

Our data sources cut across:

- Google Sheets,

- MongoDB,

- Web Application which stores document data in Firebase Firestore,

- Zoom,

- Google Analytics, and Sendgrid.

It includes everything from user signup and onboarding data, platform usage, video watch and lesson completion activities, learning methodology and schemas, quiz interactions and scores, student attendance in live, mentor sessions, user experience points (for gamification initiatives), and manually maintained bootcamp roadmaps.

We wanted to build for speed and scale at low-cost — we did.

As a wise man once said,

Begin with an end in mind

I wish I could tell you that I planned everything out in the beginning but the reality of product development is that you first begin with what works before ramping up on the form and structure.

I knew one thing though — BigQuery would be our data warehouse of choice. I had used it shortly after working for a German Edtech startup that had their tech stack relying on AWS and data warehouse on Redshift.

At the time, the structure the devs had built with to transfer Google Sheets data into Redshift seemed so immaculate, so awe-inspiring for Power BI/Tableau developers like me. Yes, I had switched to building with Tableau — it's a breath of fresh air and unlimited possibilities designing modern visual experiences with the language of data visualisation.

Plus, my AWS registration was being delayed compared to Google Cloud's BigQuery which was instant - they only required my gmail address to begin. I continued with BigQuery and never looked back. I followed a modular approach to building our data architecture and its associated products.

The Framework Overview - Data Architecture on Google Cloud

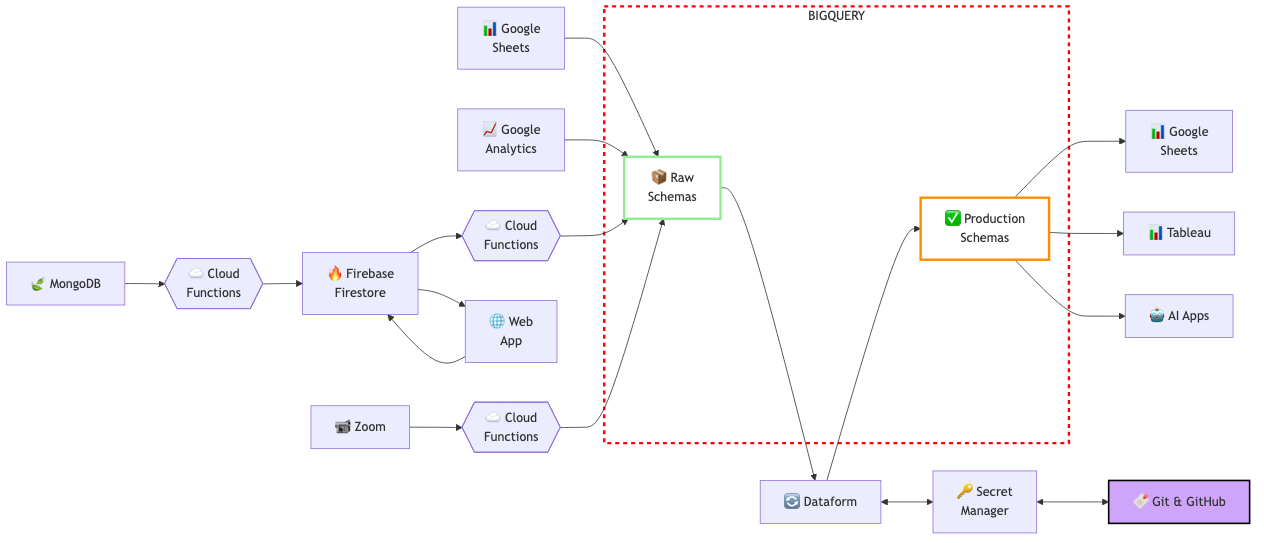

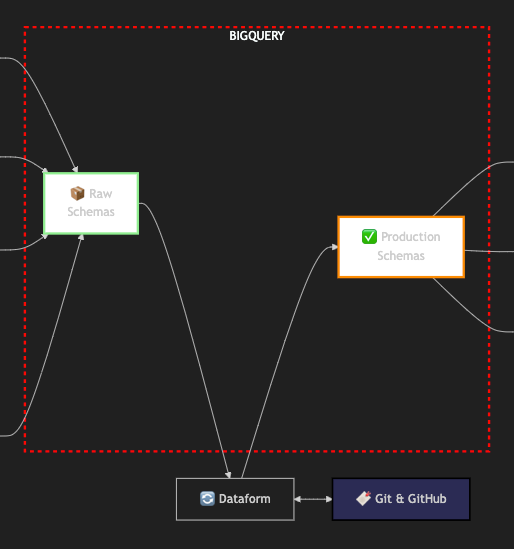

High-level Architecture Diagram

As I mentioned, what I will cover largely is the methodology behind building end-to-end data pipelines with an architecture that is ripe for powering software applications and business intelligence applications.

On a high-level, this diagram covers the flow of data including the tools and cloud resources employed to achieve the overall goal of designing scalable data architecture.

Core Principles and Design Decisions

From the architecture diagram above, the data flows from the left to right with the final consumers at the far right — business intelligence tools such as Tableau and Power BI, and Google Sheets.

In this section, I will discuss some of the rationality behind the data architecture design decisions. Before that, let me explain that Resagratia is a technical skills training platform and a trusted hiring partner for startups, small businesses, and consulting companies needing tech resources.

Primary Data Sources - Web App and Google Analytics

With that foundation, it's easy to see that there will be a learning management system (LMS) powering the skills training platform. We settled with using React to build the web app frontend user interface powered by NodeJS for backend development.

There are so many advantages of this approach including open-source, cross platform, fast processing, high performance and multitasking.

The web app makes use of backend NoSQL resources like MongoDB and Firebase Firestore for document and data storage. Indeed, we opted to build with the Firebase ecosystem for ease and interoperability with many Google Cloud resources.

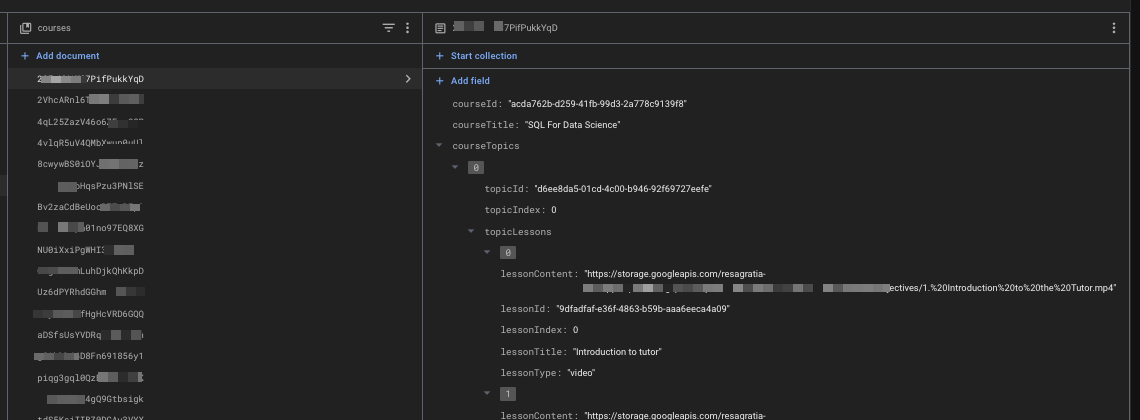

In terms of data touch points, the web app generates and stores data on user signup, user attributes like firstname and how_did_you_hear_about_us etc, quiz attempts and completions, video watch activities and literally data on any feature we want in cloud Firestore. Firestore can then be used as a backend resource for APIs used by the web app or other applications by using Firestore client libraries or REST API.

[Snapshot from a Firestore collection containing multiple documents. This is similar to a relational database storing multiple tables.]

TLDR: web app generates and stores data in Firestore. Firestore powers APIs used by features within the web app.

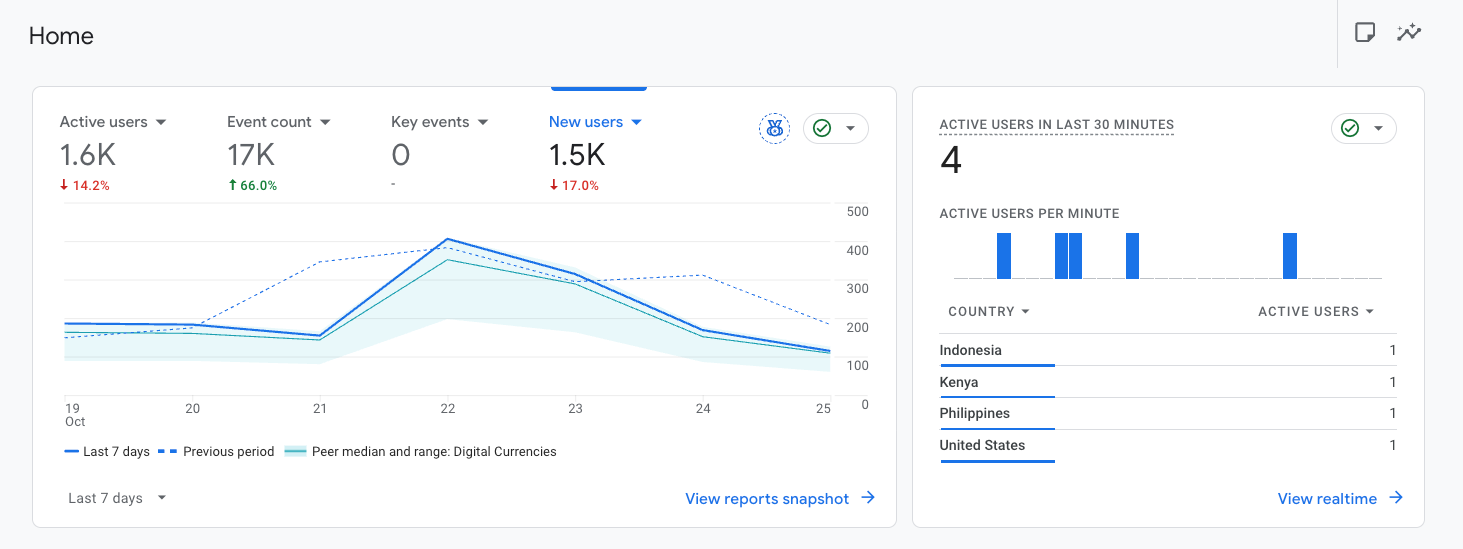

Another primary data source is Google Analytics which collects data on events and actions performed by users on the web app. It includes data on pages visited, actions taken, time spent on screen, source or medium through which user landed on the page, device used, etc. With Google Analytics 4 (GA4), you can integrate data from both mobile and desktop applications and manage those data points neatly.

[Snapshot from a Google Analytics home screen showing active users in the last 7 days and active users in the last 30 minutes (realtime)]

How is Firestore data collection made available in BigQuery?

In BigQuery, a schema of tables is known as a dataset. I created datasets for each data source to store initial raw data.

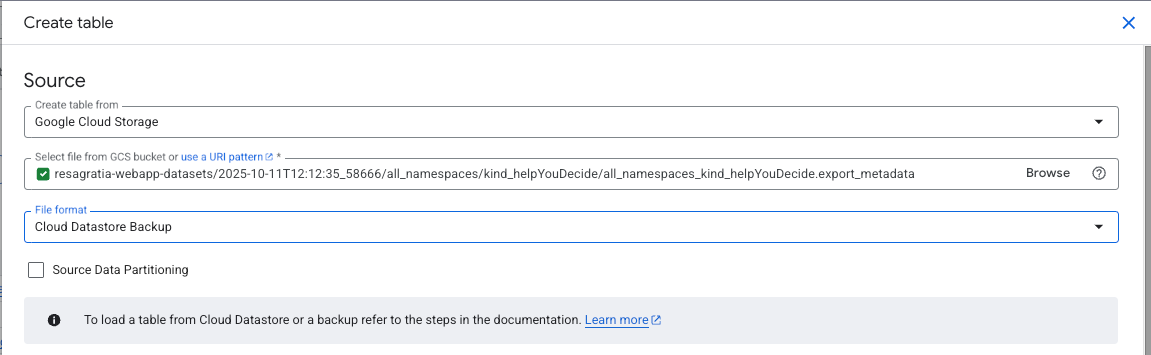

For Firestore, I initially opted for a manual exports via the managed service discussed in this guide. This process essentially creates a backup of your data in Google Cloud Storage — an object storage and data lake.

Then from the raw schema in BigQuery, I create a table using a Cloud Datastore backup as the input source.

[Snapshot of BigQuery table creation from Google Cloud Storage]

This approach is solid with a backup of data in case of any accidental deletion or resource failures. However, it's a two-step process that doesn't scale with increasing need for speed and agility.

The next stage was to schedule exports using the approach described here. This ensures that selected Firestore collections are transferred into Cloud Storage using Cloud Functions and Cloud Scheduler periodically. This schedule was set to weekly and subsequently to daily.

This is a good time to touch on Cloud Functions - a serverless framework that lets you automatically run backend code in response to events triggered by background events. Simply put, you write the code in Node.js or Python and deploy using functions (similar to AWS Lambda) without having to worry about setting up servers and clusters. Your code will run in the cloud — in your absence and at the cadence at which you set or following the event you set to trigger it.

Okay, so now the data flows automatically every day into Cloud Storage, but how does the data update in BigQuery? You guessed it — deploy another function that periodically moves the data from Cloud Storage into BigQuery. That approach introduces another moving part, although automated, that could slow down real-time analytics.

The most straightforward approach I settled with is to stream Firestore directly into BigQuery raw schema. This method uses a Firebase extension to export documents into BigQuery whenever there's an update (create, update, or delete operations) to the collection of documents. You can then run queries against the mirrored data in BigQuery. As you must have guessed, the extension essentially creates a cloud function to flow the data whenever there's an update. Pretty neat!

An important note here is that you must run the import script over the entire collection after installing the extension; it ensures that previously existing data is backfilled and that writes to your Firestore collection during the import are accurately exported to the Bigquery dataset.

That was a lot to condense but it was essential to understand the reasoning behind the data architecture design for different, evolving stages of needs.

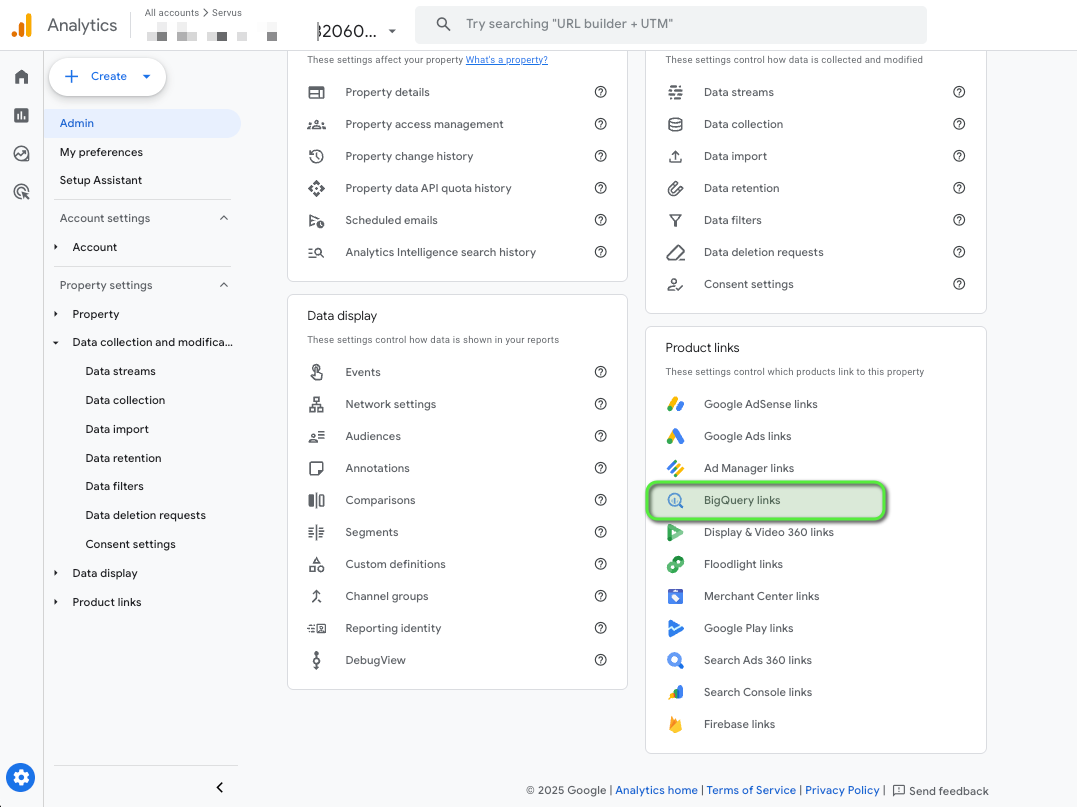

Extract and Load Google Analytics 4 (GA4) data into BigQuery

A lot of data-driven marketing teams are increasingly linking their GA4 property to BigQuery. It provides granular level analysis of events data that can be used for customised reporting and to power other applications. In my use case, google analytics data was combined with data generated in-app to design gamified learning features involving leaderboards, experience points, and usage analytics.

The approach is straightforward — set up BigQuery export from within the Google Analytics 4 admin interface. Select a raw dataset schema within BigQuery where the data should be stored and data should start flowing within 24 hours once the linkage is complete. You can select either or both a Daily (once a day) or Streaming (continuous) export of data. Usually, once a day is good enough for most applications. If you enable daily export, then 1 file will be exported each day that contains the previous day’s data.

[Snapshot of BigQuery Export Link from Google Analytics 4]

A key thing to note here is that GA4 data tends to be notoriously delayed by a couple of hours, sometimes up to 48 hours. For instance, at 8 am, you will see some data for the previous day but it may not be until later in the day when all events from the previous day are populated.

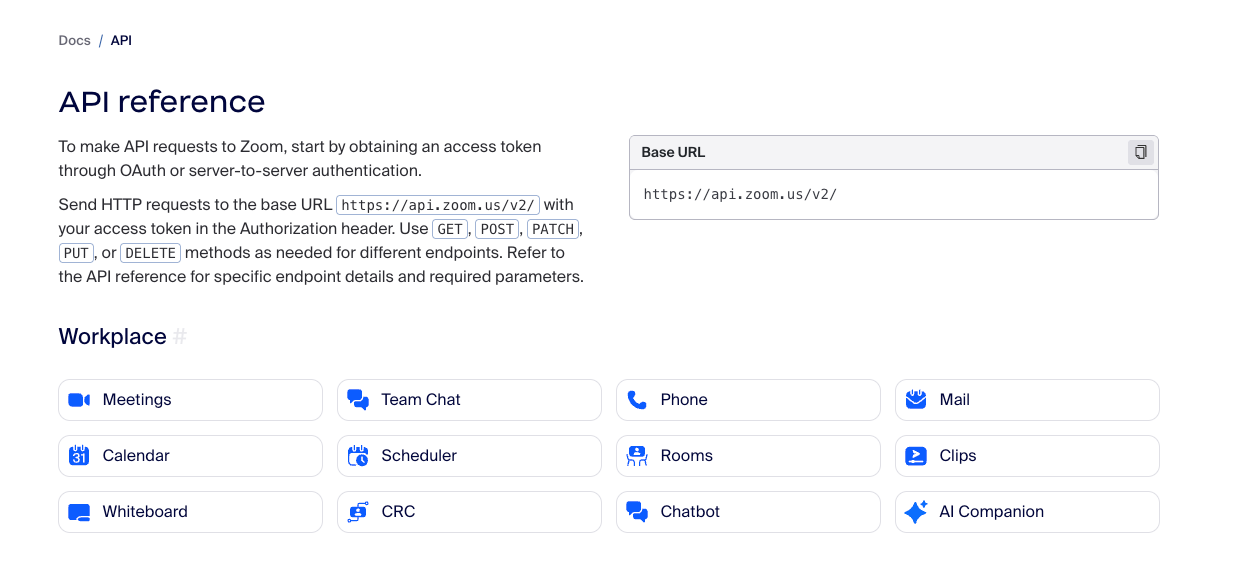

Secondary Data Sources - Zoom and Google Sheets

Every weekend, our students join industry mentors in live sessions held on Zoom. Zoom provides the software to host these sessions online along with an API to access records of participants, their duration in those meetings, and meeting recordings.

These datasets are important to:

- gamify and automatically reward learners who join the live sessions and contribute with experience points.

- share meeting recordings to enable users better review the concepts covered during those sessions. For those who missed the sessions, although they wouldn't get the experience points for attendance, they would appreciate the consideration. But if I'm to take off the mask, I think the approach encourages a lackadaisical attitude towards learning. It's better to be present in the live sessions — that's when the real magic happens.

[Snapshot of the Zoom developers API reference home page.]

Some scripts were written in Python to flow data from the Zoom API into a BigQuery raw schema. After the meetings are held on Saturday, the job kicks off to automatically transfer that data into BigQuery when most people are asleep. I personally enjoyed building this workflow because it allowed us to extract the most value from the Zoom subscription without paying for additional software or hosting user data on another platform.

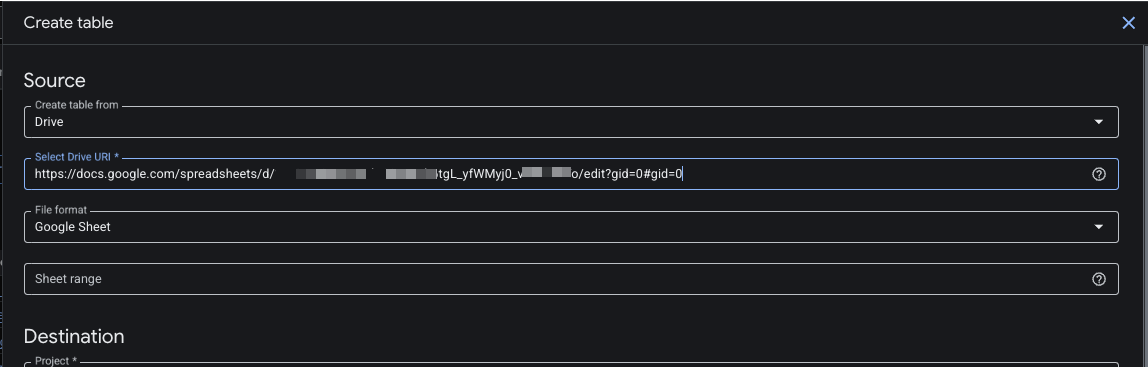

Finally, Google Sheets. You may ask why? Google Sheets is actually a very useful backend data source for quickly building MVPs and applications that don't require big data or sophisticated engineering. You can combine Google Sheets with Google Forms, App Script, and BigQuery to build very useful, functional applications and automations.

For our use case, Google Sheets was used to host data on bootcamp roadmaps, bootcamp calendar, and some manually collected data on student team presentation sessions. It allows us to quickly test ideas and concepts before burning through development resources.

Integrating Google Sheets into BigQuery is also straightforward because Google built this into the Create table interface. It's almost like every use case was well thought of.

Some notes on integrating Google Sheets into BigQuery:

- ensure that your sheet is formatted with well-labelled headers

- the Google Sheets data will initially be stored as an external table in BigQuery. It will not occupy physical space and any changes to Google Sheets will reflect instantly in BigQuery.

- the external table effect also limits the analysis and actions you can perform on that table until you manually create a table using the external table connection as source.

Instead of auto-detecting the schema, I usually edit as text to allow for precise control. For instance, if I have a Google Sheets with three columns that I want to import into BigQuery — user_id, firstname, and presentation_date — the schema will look like this:

[

{

"name": "user_id",

"type": "STRING",

"mode": "REQUIRED"

},

{

"name": "firstname",

"type": "STRING",

"mode": "NULLABLE"

},

{

"name": "presentation_date",

"type": "DATE",

"mode": "REQUIRED"

}

]In order to transform the Google Sheets external table in BigQuery into a native table, you can run a query like this:

CREATE OR REPLACE TABLE `google_sheets.student_presentations`

AS (

SELECT * FROM `google_sheets.student_presentations_raw`

)At this point, we're starting to write some SQL where previously, it was largely about Python, deploying cloud functions, and making design choices for streaming and exporting data from various sources.

So far I have covered how to get data from multiple sources into raw dataset schemas in BigQuery. Some might argue that such raw data should be stored in a lake like Cloud Storage (as a backup) before flowing (extracting and loading) into BigQuery, however, consider the cost and necessity of having that extensive design — not all datasources require such elaboration. For instance, our Google Analytics datasets do not require an intermediary lake for storage.

Data Transformation & Modelling - Dataform

Dataform is Google Cloud's native version of dbt, a data transformation and modelling tool. With Dataform, data engineers and data analysts can collaborate to build production-grade, scalable SQL pipelines using software engineering design principles such as version control, testing, environments and documentation.

[Snapshot of the BigQuery & Dataform section of the data architecture design]

Dataform is integrated directly within BigQuery studio so you don't have to leave the development environment. It's open source and it provides documentation for your tables and lineage graphs for every model that you build. Plus, it's a free service — tell me why I wouldn't bite? You'll only pay for the severless orchestration runs that update your tables.

[Snapshot of BigQuery studio components and tools]

Dataform comes in at a very important section in the data architecture to:

- combine data from various sources in the raw BigQuery schemas,

- clean, transform and enrich the data, and

- produce production-grade dimensionally modelled datasets with fact and dimension tables

Dimensional modeling is a data design technique used in data warehouses to organize data for analysis and reporting by separating business transactions and events into fact tables and contextual information into dimension tables.

After creating your Dataform repository, the next step is to create a development workspace (DW) which contains an editable copy of your team's repository. You can think of this workspace as where you can bring in your BigQuery datasources, write code, commit, and push commits to a remote Git repository without affecting any other team mates. For simplicity, I usually name my workspace the same as my Git repository.

Once the workspace is created, you need to initialize it by creating some essential files. At the time of writing this, I am using Dataform core version 3.0.0 and my workflow_settings.yaml file looks like this:

defaultProject: your-bigquery-project-id

defaultLocation: EU

defaultDataset: your-bigquery-datasetname

defaultAssertionDataset: dataform_assertions

dataformCoreVersion: 3.0.0The defaultProject is your BigQuery project id while the location can be modified to US or wherever you want the models to be hosted. The defaultDataset field requires, ideally, a fresh dataset schema created in BigQuery to house the production tables. In many workspaces, I've named this `analytics` but bear in mind that not all models will appear in this dataset.

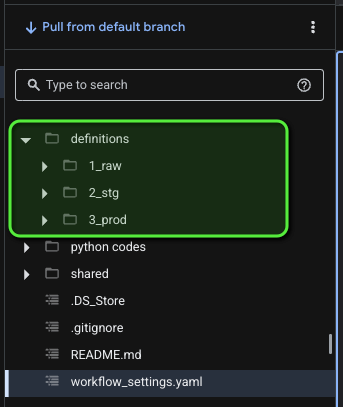

This is how I structure my development workspace folders and models:

[Snapshot of project setup within Dataform]

In the definitions folder, I create raw, staging, and production folders. These are important to maintain a logical and segmented flow of your data pipeline.

In the raw folder, all data sources from the raw schemas in BigQuery are brought in. Each raw table will be a represented as a single, configurable data source. It's important to highlight that SQLX is the primary tool used in Dataform — it is an open source extension of SQL that makes development faster, more reliable, and scalable. It includes functions including dependencies management, automated data quality testing, and data documentation.

For instance, to bring in the raw users table, I create a new SQLX file in the raw folder and input code in the following format:

config {

type: "table",

schema: "analytics_raw",

description: "all resagratia user signups exported from firestore"

}

SELECT

*

FROM `firestore_export.users` A typical SQLX file contains a configuration block where you can set the type of materialisation you want the model to take. In this case, I want my raw tables to be set as "table". This creates a backup in the "analytics_raw" dataset in BigQuery and ensures very fast load of tables in the raw folder. The "description" field is where you document and shed light on the datasource. This is really useful for transferability of work and improves clarity of code even in your absence.

Note: To properly create the models in the raw table, I manually created the "analytics_raw" dataset/schema in BigQuery where these tables will be materialised. My final production tables will be stored in the "analytics" schema. It just makes it easier to track and maintain as you scale workloads.

In the staging (stg) folder, I perform transformations, combine datasources, and enrich the datasets. The "type" field is set to "view" because these models do not need to be materialised as tables at this stage. It's also cheaper to use views when complex computations over large tables are involved.

config {

type: "view",

schema: "analytics_stg"

description: "This view transforms the firestore_export JSON data of quizzes taken by students"

}

WITH quiz_scores AS (

SELECT

JSON_VALUE(data, '$.userId') AS userId,

CAST(JSON_VALUE(quiz, '$.score') AS INT64) AS score,

JSON_VALUE(quiz, '$.topicId') AS topicId,

JSON_VALUE(quiz, '$.topic') AS topic,

JSON_VALUE(quiz, '$.course') AS course,

TIMESTAMP_ADD(

TIMESTAMP_SECONDS(CAST(JSON_VALUE(quiz, '$.timestamp.seconds') AS INT64)),

INTERVAL CAST(FLOOR(CAST(JSON_VALUE(quiz, '$.timestamp.nanoseconds') AS FLOAT64) / 1000) AS INT64) MICROSECOND

) AS timestamp

FROM

${ref('quiz_raw')},

UNNEST(JSON_EXTRACT_ARRAY(data, '$.quizDetails')) AS quiz

)

SELECT * FROM quiz_scoresAs you can see, in this example, I'm transforming the quiz scores which were streamed into BigQuery as JSON from Firestore. In the config block, the type is stored as "view" while schema is "analytics_stg". Of course, a dataset has already been created in BigQuery to store all the models in the Dataform staging folder.

There are more fields and options that you can customise in your config block. For instance, you can include the columns setting to document the meaning of each column.

config {

type: "view",

schema: "analytics_stg",

description: "This view transforms the firestore_export JSON data of quizzes taken by students",

columns: {

userId: "The user ID of the student who took the quiz",

score: "The score obtained by the student on the quiz",

topicId: "The ID of the quiz topic",

topic: "The name of the quiz topic",

course: "The course associated with the quiz",

timestamp: "The timestamp when the quiz was taken"

}

}You can also add more BigQuery specific settings like partitions and clustering.

config {

type: "incremental",

schema: "analytics",

description: "all google analytics events from the web app",

uniqueKey: ["event_id"],

bigquery: {

partitionBy: "DATE(event_timestamp)",

clusterBy: ["user_pseudo_id"]

}

}

SELECT

*

FROM ${ref('google_analytics_stg')}

-- include a look-back window: only reprocess last 3 days to catch late-arriving events

${ when(incremental(), `WHERE DATE(event_timestamp) >= DATE_SUB(CURRENT_DATE(), INTERVAL 3 DAY)`) }Here I've created the google analytics dataset as an incremental table, partitioned by the DATE of event_timestamp and clustered by the user_pseudo_id.

An incremental table is a table in a data warehouse that is updated by adding or changing only the new or modified data since the last update. This process is more efficient than a full table load, which replaces the entire dataset each time. This is particularly useful for the google analytics dataset that easily runs into gigabytes of data after collecting data for a couple of months.

Note also that tables within the staging and production folders are only referencing the raw models by creating a dependency using the `ref` function. This will help us build a cool lineage graph (shown in the following chapter). By using the `ref` function, the dependency tree complexity is abstracted away. It helps data engineers and analysts write shorter, reusable, and simpler queries instead of very long ones. This makes building data pipelines easier and more efficient.

And that's not all — Dataform comes prebuilt with the ability to define data quality tests, called assertions. You can define assertions directly in the config block and check for uniqueness, NULL values, or any custom row conditions you set.

assertions: {

uniqueKey: ["userId", "topicId", "timestamp"],

nonNull: ["score"],

rowConditions: [

"score <= 10"

]

}In our quiz model, I set an assertion to ensure the combination of userId, topicId, and timestamp is unique. If duplicates exist, the assertion fails. It also verifies that the score is never NULL and that all quiz scores fall between 0 and 10. The model must pass all three checks for the assertion to succeed. You can customize these checks to fit your own data quality needs.

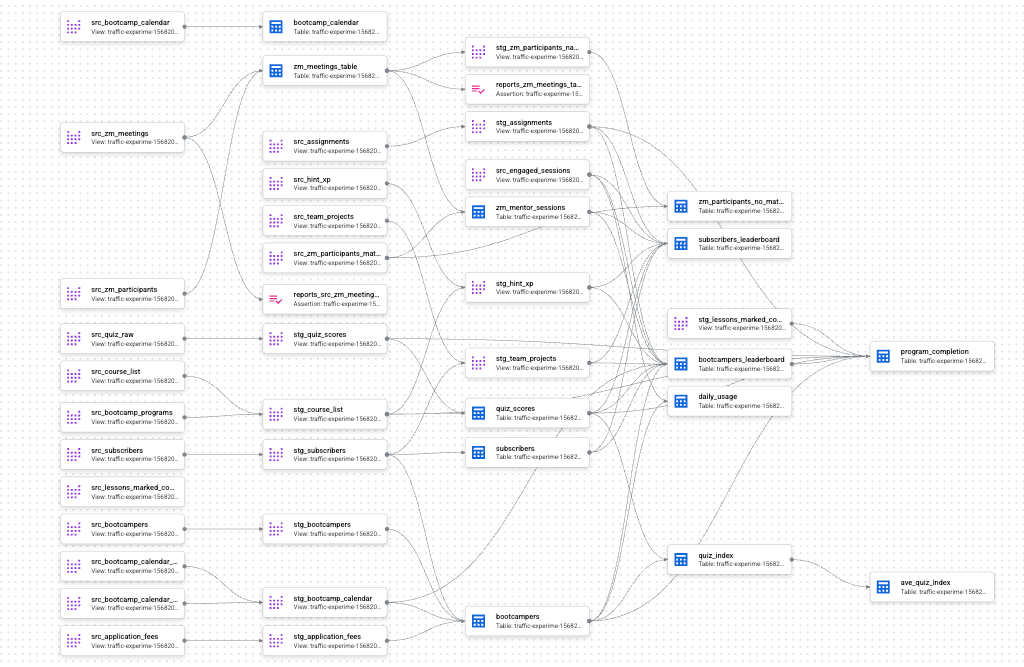

The final data models in the production (prod) folder are stored and materialised as tables. Remember the `ref` function? All the tables in prod have dependencies that are easily traced within the compiled graph (lineage chart). The downline tables have to be executed before they can referenced in the upline tables using the `ref` function. This is one of the coolest aspects of using a tool like Dataform — the ability to see how the production tables and data architecture all came together.

[Snapshot of Dataform compiled graph A.K.A. lineage graph]

In this compiled graph, data flows from the left hand side to the right hand side with the final production tables at the far right and materialised as tables in BigQuery. You can also see some assertions created on certain tables.

To close the chapter on Dataform (not that I'm bored), it's important to version control your code using Git and store that in a repository on GitHub for collaboration. Dataform seamlessly integrates with Git and this guide walks you through how to set up that link with a third-party Git provider like GitHub. In my case, I usually connect through HTTPS by generating a personal access token. The tokens serve as an alternative to using passwords for authentication to GitHub when using the GitHub API or the command line.

Once the personal access token for GitHub has been generated, you can store it in Google Cloud Secret Manager to avoid exposing it directly in the connection settings. This is general best practice for storing and exposing sensitive information and API keys within your code.

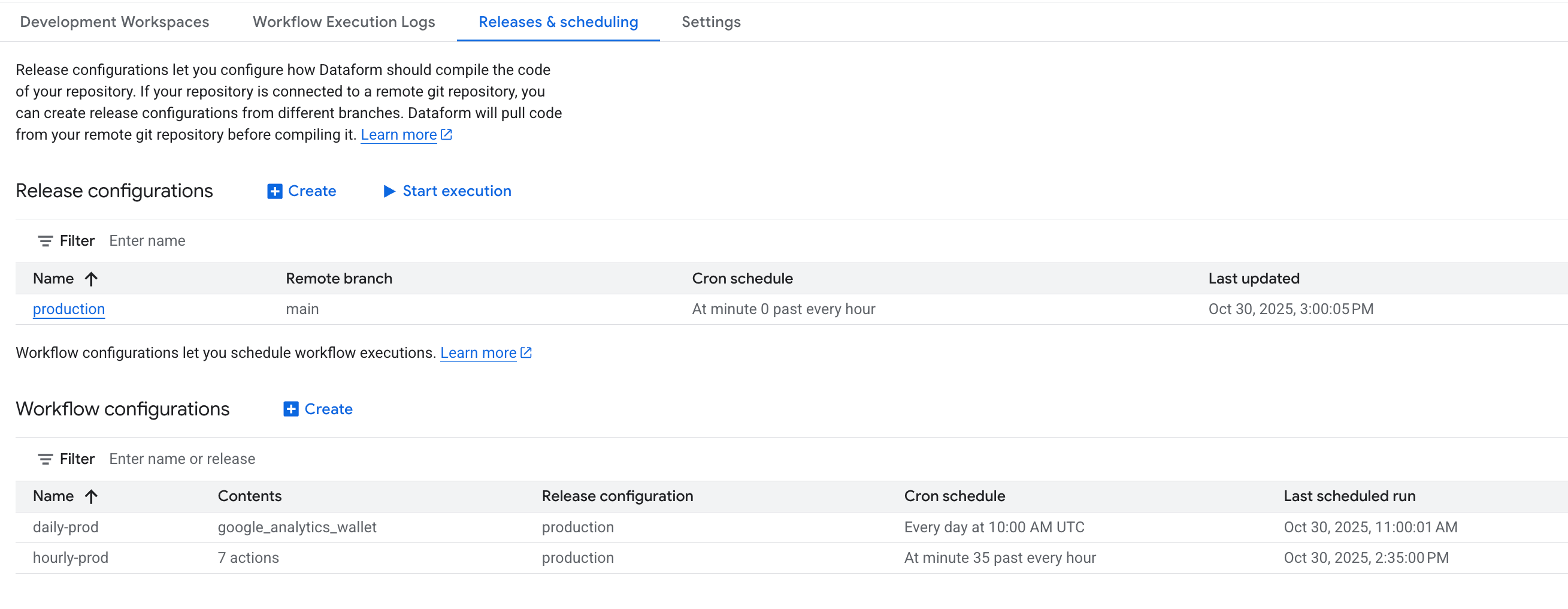

After connecting your remote Git repository using a provider like GitHub, the final bit is to set workflow runs within the "Releases & scheduling" section in your Dataform repository. Dataform will pull code from your remote git repository before compiling it. This is the "release configuration" aspect — a snapshot of the latest version of your codes. You can then set workflow runs, essentially CRON jobs, for selected production tables and at different frequencies.

[Snapshot of workflow runs and orchestration settings page in Dataform]

In my example above, I've set two workflow runs - one for daily runs of google analytics dataset and the other for hourly updates of more operational datasets.

The simplifed workflow looks like this:

- write SQLX code, create new data models, and enrich your data

- execute a run to materialise and store in BigQuery

- commit your code and include a clear commit message e.g. "refactor logic for quiz scores"

- push your committed code to your remote Git repository and create a Pull Request (PR)

- go to your GitHub repository — accept and merge the latest changes

- adjust workflow runs in Dataform if necessary

- monitor runs to ensure they're successful and set alerts in case of errors and failure

- repeat from the top and reiterate until you have a very robust architecture

And that's pretty much the entire flow in terms of building resilient, scalable and low cost, modern data architecture. A lot of adjectives — I know. I have found a lot of value in building these flows and pipelines over and over again and I am on the lookout for ways to continously improve. I believe a lot of organisations will also benefit from structuring their data pipelines in a similar fashion to reduce technical depth and speed up querying and accuracy in consumer analytics tools like Tableau and Power BI. This framework is also great for the development of AI applications and agentic workflows.

Real-World Results

Performance metrics (latency, throughput, cost)

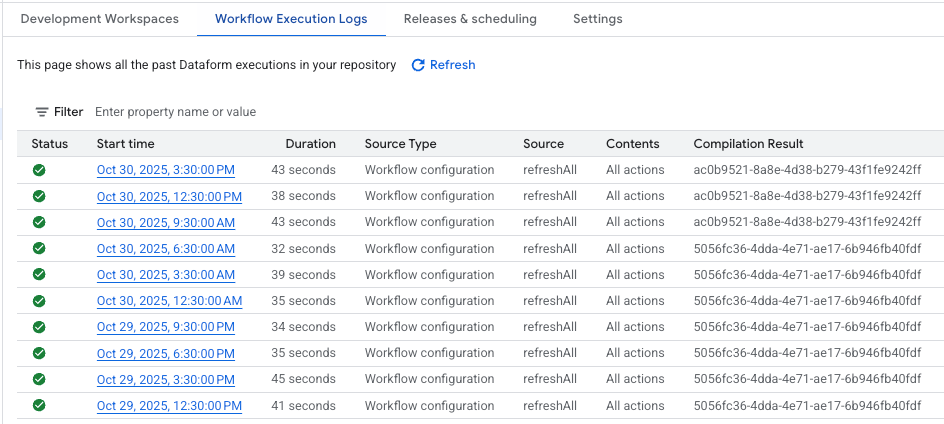

For the core engagement datasets, workflow runs take anywhere between 30 seconds and 45 seconds to complete. The initial run might take slightly longer to complete, however, subsequent runs tend to be faster due to caching and optimization. This snapshot captures all the data models in the production schema running into tens of GB of on-disk memory. You can say that's not bad at all.

[Snapshot of workflow execution logs in Dataform]

In terms of cost, according to Google, the Dataform service is free. This means that the bulk of the cost go to storage, querying, and workflow orchestration. For our use case, this is currently under $40 per month but it's variable based on the criteria shared earlier. It was under $10 - $15 in the early, good days.

With growing demands in storage and consumer analytics especially in business intelligence tools like Tableau, Google Sheets, or even AI applications, this is a very manageable cost and it fits what I set out to build initially: a scalable, resilient, low-cost data architecture.

What I would Have Done Differently

I had a lot of fun building my first Dataform project and felt excited each time I discovered new ways to improve the pipeline. If I could do it again, I would connect the Dataform repo to a remote Git repository earlier and focus more on mastering workflow orchestration from the start. It took me some time to fully understand release configuration and how to optimize workflow runs effectively.

I also got to know later that the same Dataform - GitHub repository can be used to host other python codes and improve collaboration.

Conclusion

Within a short space of time working with Dataform, I learnt how to design better data architecture and build high-performance data warehouses. I also learnt to integrate python scripts to run on severless architecture that deliver raw data into BigQuery and seamlessly integrate with the core data repository. Every serious modern business needs to have a similar set up — a scalable, version-controlled approach to data analytics architecture that can seamlessly power web and business applications used by hundreds of thousands of users.

If you'd like to discuss a project or have an interesting idea that you need to ship, feel free to reach out to me on LinkedIn.

- Introduction

- The Framework Overview - Data Architecture on Google Cloud

- High-level Architecture Diagram

- Core Principles and Design Decisions

- Primary Data Sources - Web App and Google Analytics

- How is Firestore data collection made available in BigQuery?

- Extract and Load Google Analytics 4 (GA4) data into BigQuery

- Secondary Data Sources - Zoom and Google Sheets

- Data Transformation & Modelling - Dataform

- Primary Data Sources - Web App and Google Analytics

- Real-World Results

- Performance metrics (latency, throughput, cost)

- What I would Have Done Differently

- Conclusion

Empowering individuals and businesses with the tools to harness data, drive innovation, and achieve excellence in a digital world.

2026Resagratia (a brand of Resa Data Solutions Ltd). All Rights Reserved.