Pandas for Data Analysis

By James Olayinka

Introduction

Pandas is a popular open-source data analysis and manipulation tool written in Python. It provides data structures for efficiently storing and manipulating large datasets, and functions for cleaning, transforming, and aggregating data.

In the previous series, I explored NumPy, a popular Python library for numerical computation, and its essential uses for data manipulation. In this tutorial, I'll start with the basics of Pandas and gradually move towards more advanced topics. The concepts covered include:

- Installation of Pandas library

- Importing the Pandas library

- Basic operations

- Viewing data

- Data information

- Descriptive statistics

- Data selection and filtering

- Data cleaning

- Data transformation

Let’s get started…

Installation of Pandas library

Before getting started, make sure that Pandas is installed. If you are using a Python distribution like Anaconda, Pandas should already be installed. If not, you can install it using pip:

pip install pandas

Importing the Pandas library

Let’s start by importing Pandas into the integrated development environment (IDE) of your choice:

import pandas as pd

I will be using the popular Titanic dataset in this tutorial, which contains information about the passengers who were aboard the Titanic when it sank.

Once you've downloaded the dataset, you can load it into a Pandas DataFrame like this:

df = pd.read_csv('titanic.csv')

This will create a DataFrame object called df that contains the data from the CSV file.
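If the CSV file is not at hand yet, the same loading step can be tried on an in-memory stand-in. The sketch below is only an illustration: the rows are invented placeholders (not real passenger records), and `sample_df` and `csv_text` are hypothetical names. It relies on the fact that `read_csv` accepts a file-like object as well as a file path.

```python
import io

import pandas as pd

# Hypothetical stand-in for titanic.csv: invented rows, not real passenger data.
csv_text = """Name,Sex,Age
Passenger A,male,22
Passenger B,female,38
Passenger C,female,
Passenger D,male,35
"""

# read_csv accepts a path or any file-like object, so StringIO works here.
sample_df = pd.read_csv(io.StringIO(csv_text))

print(sample_df.shape)  # (number of rows, number of columns)
print(sample_df)        # the empty Age field is read as a missing value (NaN)
```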

Basic Operations

Let's start by looking at some basic operations that you can perform on a Pandas DataFrame.

Viewing Data

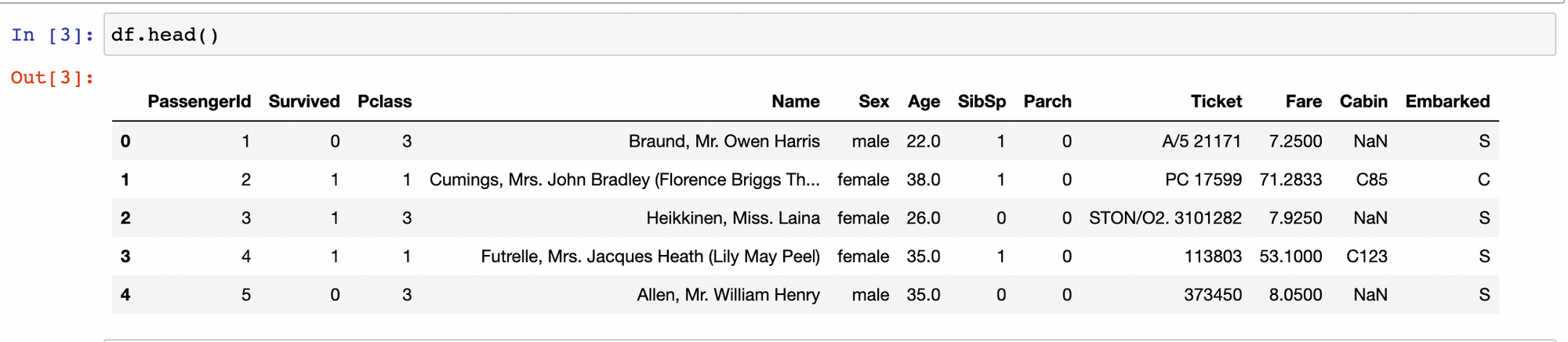

To view the first few rows of a DataFrame, you can use the head() function:

df.head()

This will display the first five rows of the DataFrame.

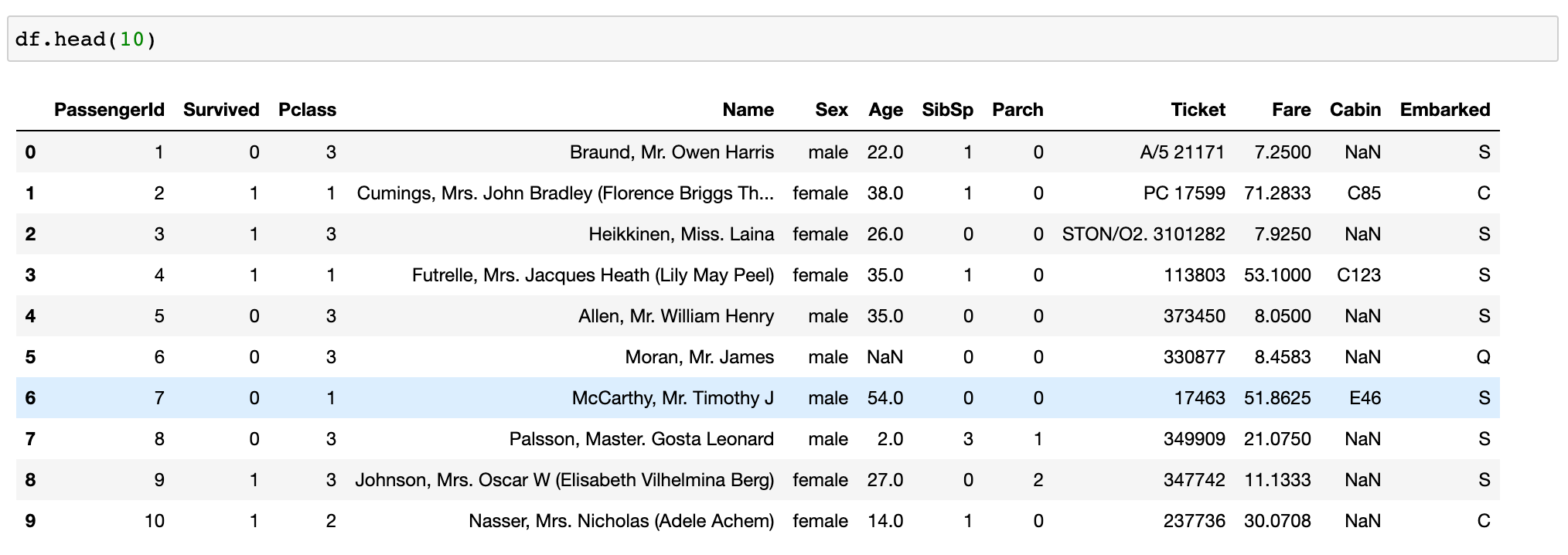

You can specify a different number of rows by passing an argument to the function:

df.head(10)

This will display the first ten rows of the DataFrame.

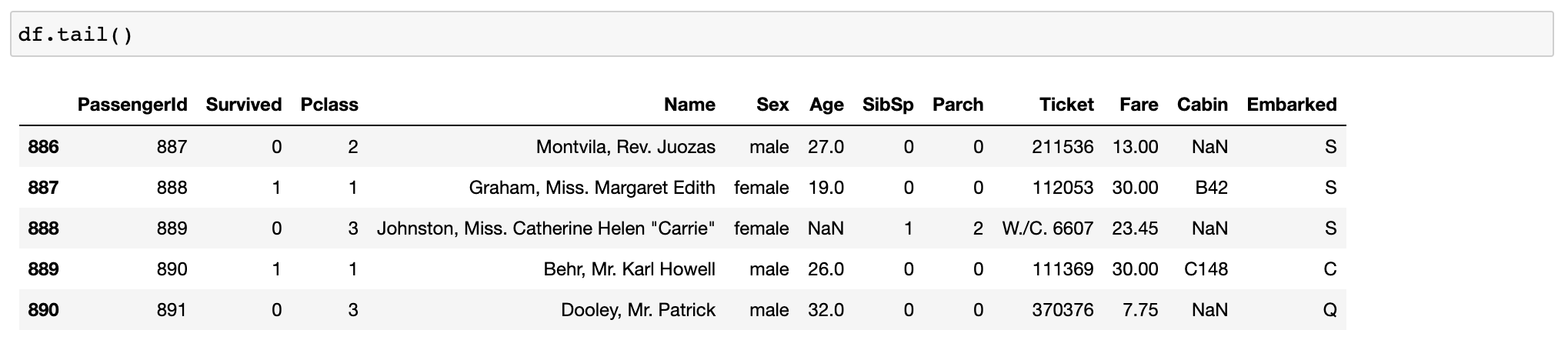

To view the last few rows of a DataFrame, you can use the tail() function:

df.tail()

This will display the last five rows of the DataFrame.

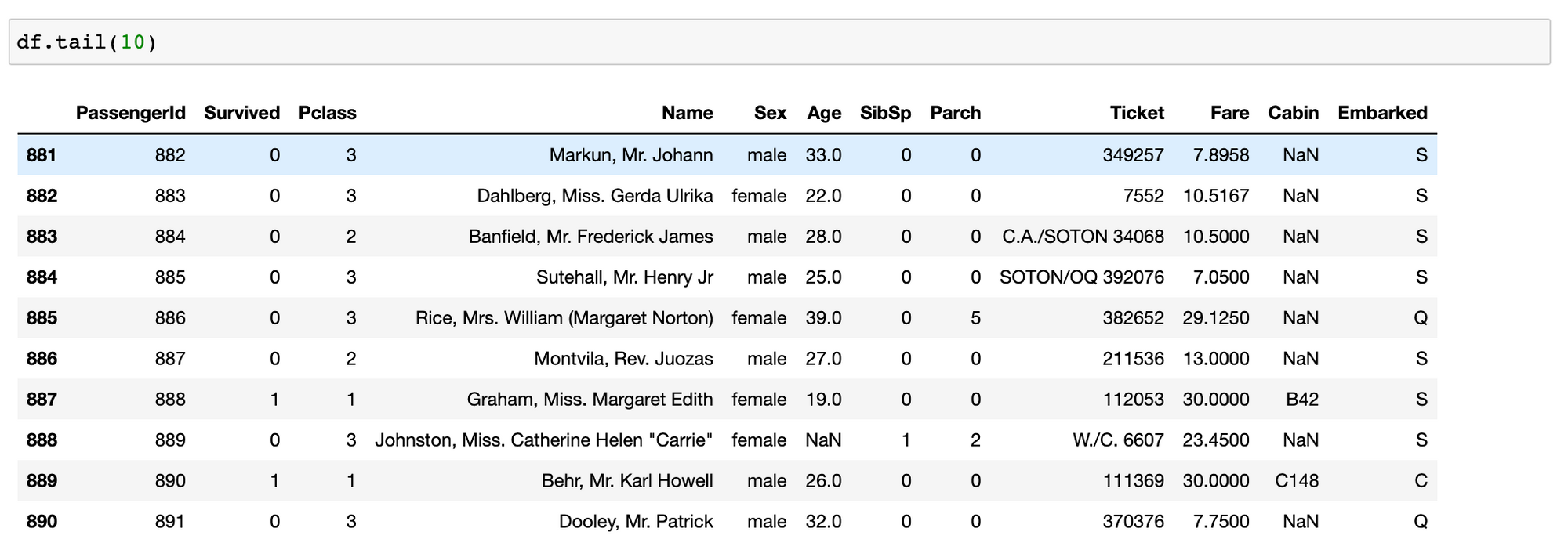

Again, you can specify a different number of rows by passing an argument to the function:

df.tail(10)

This will display the last ten rows of the DataFrame.
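To see head() and tail() side by side without the Titanic file, here is a small sketch on a made-up 20-row DataFrame. The name `numbers_df` and its `row_id` column are hypothetical and exist only for this illustration.

```python
import pandas as pd

# Hypothetical 20-row frame; the values are placeholders for illustration.
numbers_df = pd.DataFrame({"row_id": range(1, 21)})

first_three = numbers_df.head(3)  # rows with row_id 1, 2, 3
last_three = numbers_df.tail(3)   # rows with row_id 18, 19, 20
default_head = numbers_df.head()  # no argument: five rows

print(first_three)
print(last_three)
```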

Data Information

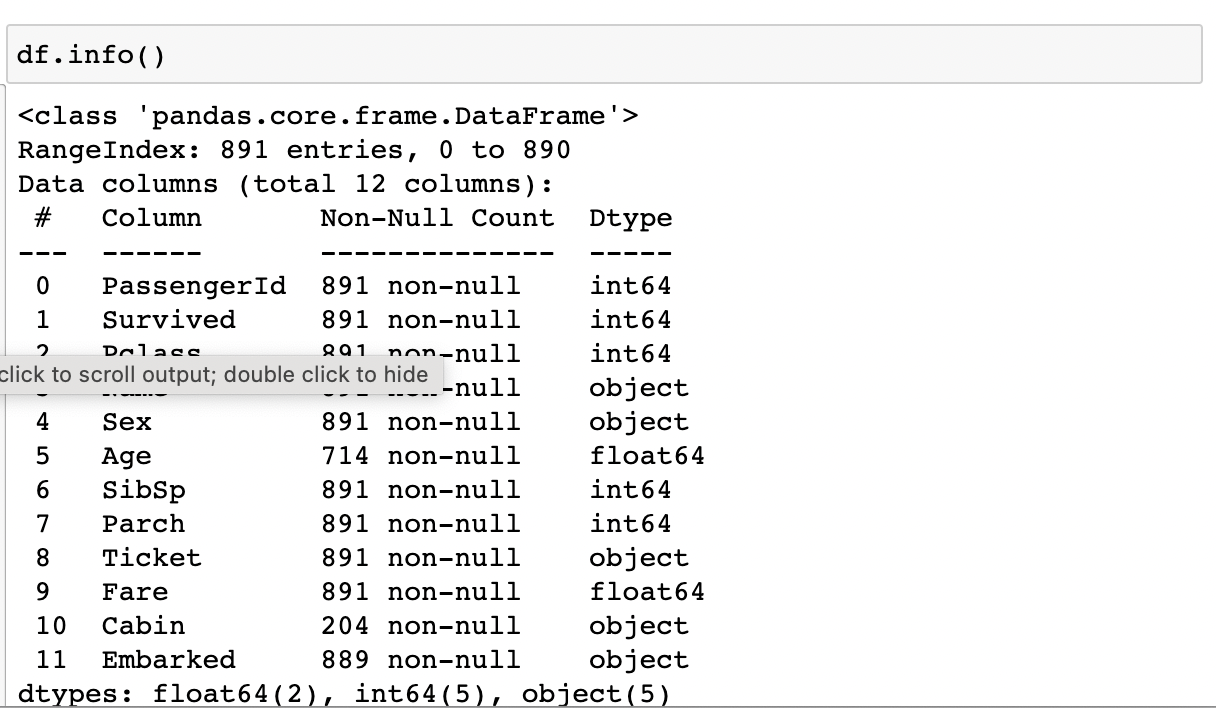

To get more information about a DataFrame, you can use the info() function:

df.info()

This will display information about the DataFrame, such as the number of rows and columns, the data types of the columns, and the number of non-null values in each column.
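The pieces of that summary can also be read individually, which helps when you need them as values rather than printed text. The following is a rough sketch on an invented sample; `people_df` and its contents are assumptions made for the example.

```python
import pandas as pd

# Hypothetical sample; None becomes a missing value (NaN) in the Age column.
people_df = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger C"],
    "Age": [22.0, None, 35.0],
})

n_rows, n_cols = people_df.shape     # dimensions as a (rows, columns) tuple
non_null_counts = people_df.count()  # non-null values per column
column_types = people_df.dtypes      # data type of each column

people_df.info()                     # prints the combined summary
```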

Descriptive Statistics

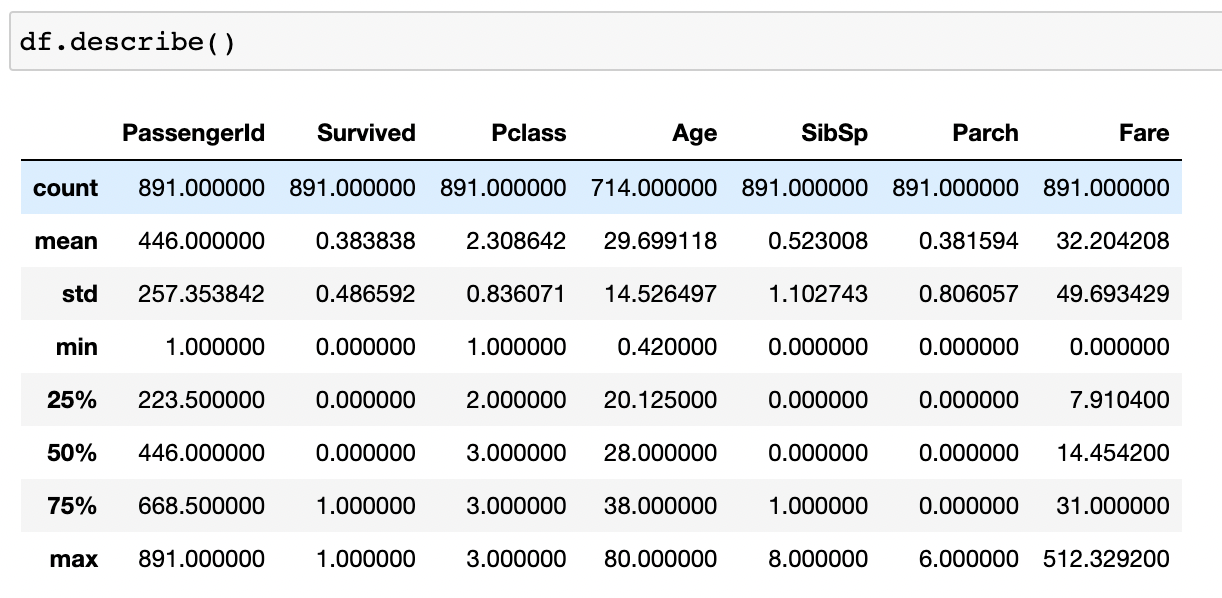

To get some basic descriptive statistics about a DataFrame, you can use the describe() function:

df.describe()

This will display statistics such as the mean, standard deviation, minimum, and maximum values for each numeric column in the DataFrame.
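As a small sketch of how describe() treats non-numeric columns and missing values, consider the invented data below (`ages_df` is a hypothetical name). By default only numeric columns are summarized, and missing values are left out of the calculations.

```python
import pandas as pd

# Hypothetical ages, including one missing value.
ages_df = pd.DataFrame({
    "Sex": ["male", "female", "female", "male"],
    "Age": [20.0, 40.0, None, 30.0],
})

summary = ages_df.describe()  # numeric columns only, so "Sex" is left out
print(summary)

# Missing values are skipped: count is 3 and the mean is (20 + 40 + 30) / 3.
age_count = summary.loc["count", "Age"]
age_mean = summary.loc["mean", "Age"]
print(age_count, age_mean)
```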

Data Selection and Filtering

One of the most important things you can do with a DataFrame is select and filter data.

Selecting Columns

To select a single column from a DataFrame, you can use the square bracket notation:

df['column_name']

This will return a Series object containing the values from the specified column.

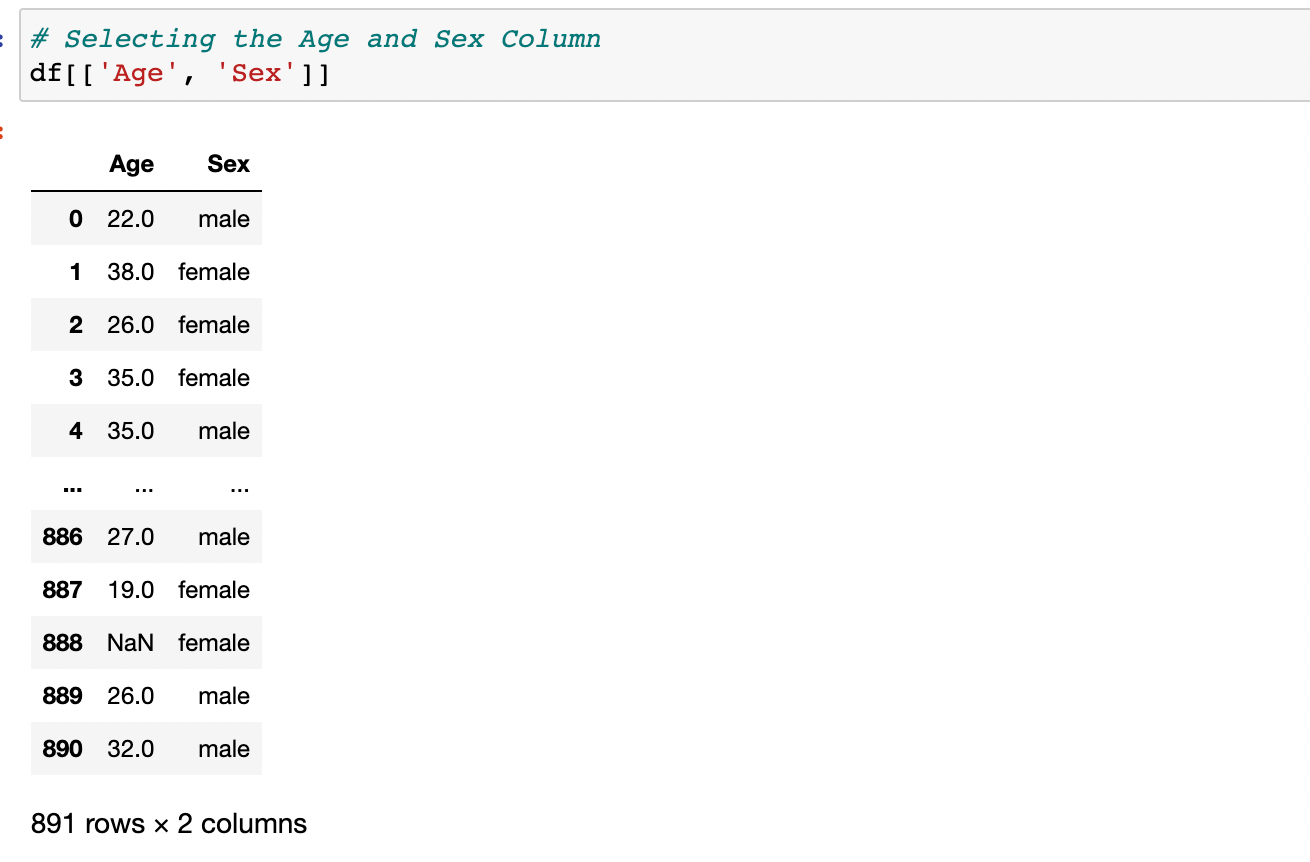

To select multiple columns, you can pass a list of column names to the square bracket notation:

df[['column_name_1', 'column_name_2']]

This will return a DataFrame containing only the specified columns. For example, df[['Age', 'Sex']] selects the Age and Sex columns of the Titanic dataset.
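The difference between single and double brackets is easy to check directly. This is a minimal sketch on invented rows (`sample_df` is a hypothetical stand-in for the Titanic DataFrame): single brackets give a Series, while a list of names gives a DataFrame, even when the list holds just one name.

```python
import pandas as pd

# Hypothetical stand-in for the Titanic data; the rows are invented.
sample_df = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger C"],
    "Sex": ["male", "female", "female"],
    "Age": [22, 38, 26],
})

ages = sample_df["Age"]                  # single brackets: a Series
age_and_sex = sample_df[["Age", "Sex"]]  # list of names: a DataFrame
age_frame = sample_df[["Age"]]           # one-item list: still a DataFrame

print(type(ages).__name__, type(age_and_sex).__name__, type(age_frame).__name__)
```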

Filtering Rows

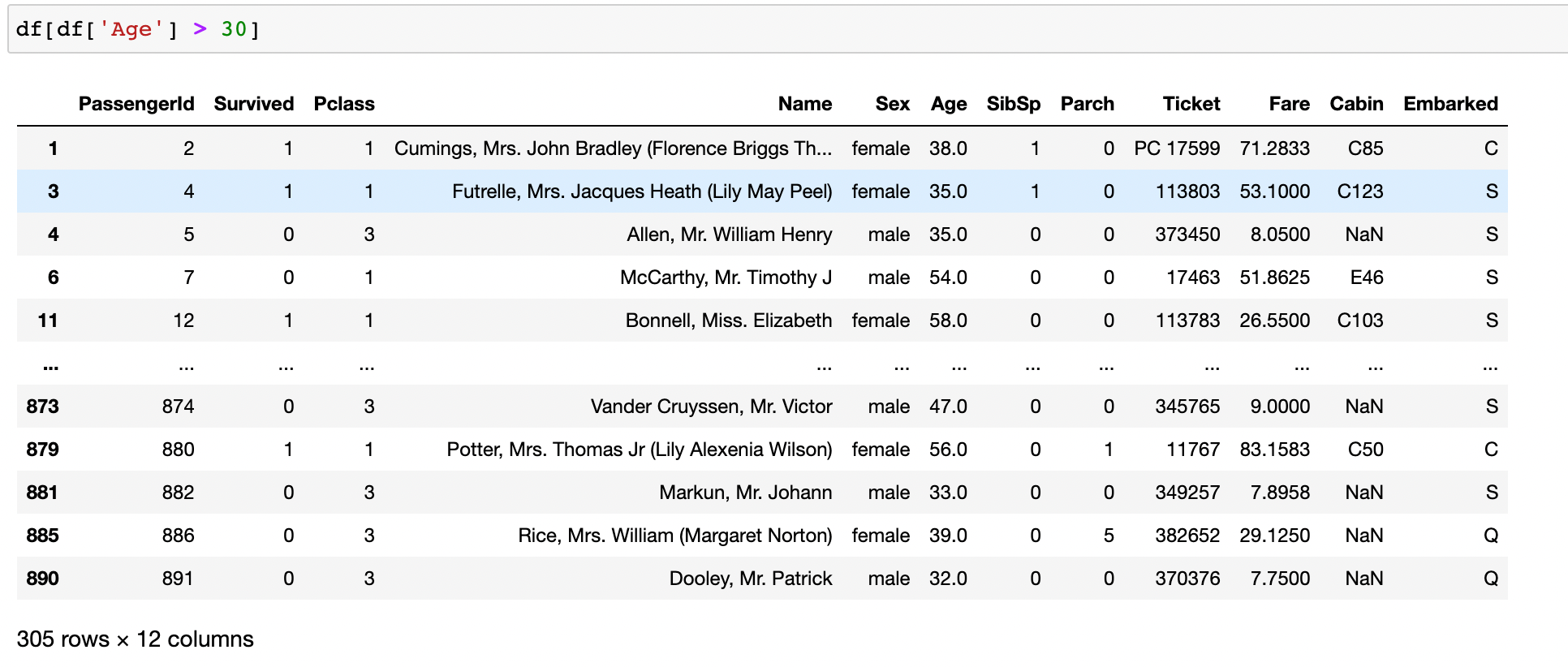

To filter rows based on a condition, you can use boolean indexing. For example, to select all rows where the "Age" column is greater than 30, you can do the following:

df[df['Age'] > 30]

This will return a DataFrame containing only the rows where the condition is true, that is, the passengers whose age is greater than 30.

Note: The number of rows has dropped from 891 to 305, because only the passengers that meet the condition are returned in the output.

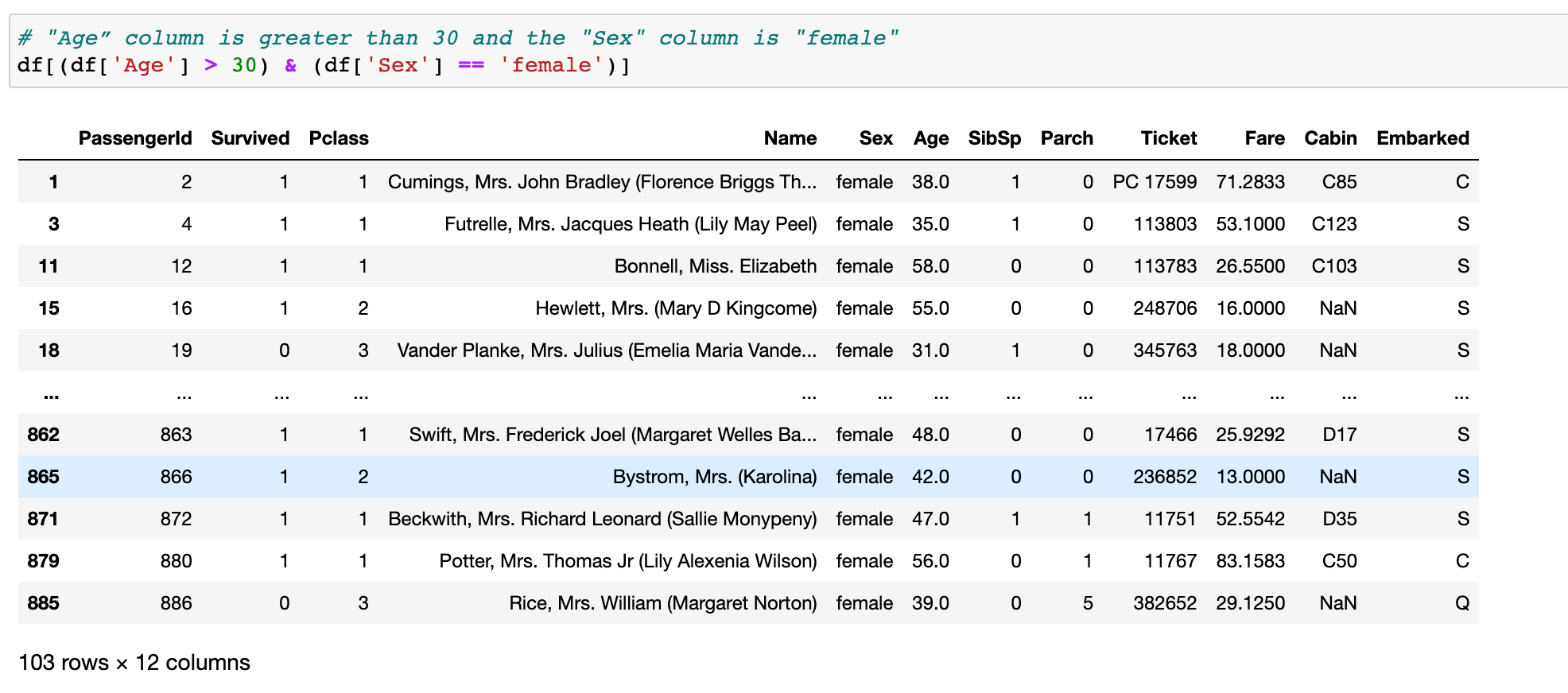

Combining Filters

You can combine multiple filters using the & (and) and | (or) operators.

For example, to select all rows where the "Age" column is greater than 30 and the "Sex" column is "female", you can do the following:

df[(df['Age'] > 30) & (df['Sex'] == 'female')]

This will return a DataFrame containing only the rows where both conditions are true.
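One detail worth spelling out: each condition needs its own parentheses, because in Python & and | bind more tightly than comparisons such as > and ==. A readable alternative is to store each condition in a named boolean mask first. The sketch below uses invented rows, and `df_small`, `over_30`, and `is_female` are hypothetical names; it also shows ~ for negation.

```python
import pandas as pd

# Hypothetical rows for illustration only.
df_small = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger C", "Passenger D"],
    "Sex": ["male", "female", "female", "male"],
    "Age": [22, 38, 26, 35],
})

# Each condition is a boolean Series with one True/False per row.
over_30 = df_small["Age"] > 30
is_female = df_small["Sex"] == "female"

both = df_small[over_30 & is_female]    # and: both conditions hold
either = df_small[over_30 | is_female]  # or: at least one condition holds
not_female = df_small[~is_female]       # not: the condition is inverted

print(both)
print(either)
print(not_female)
```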

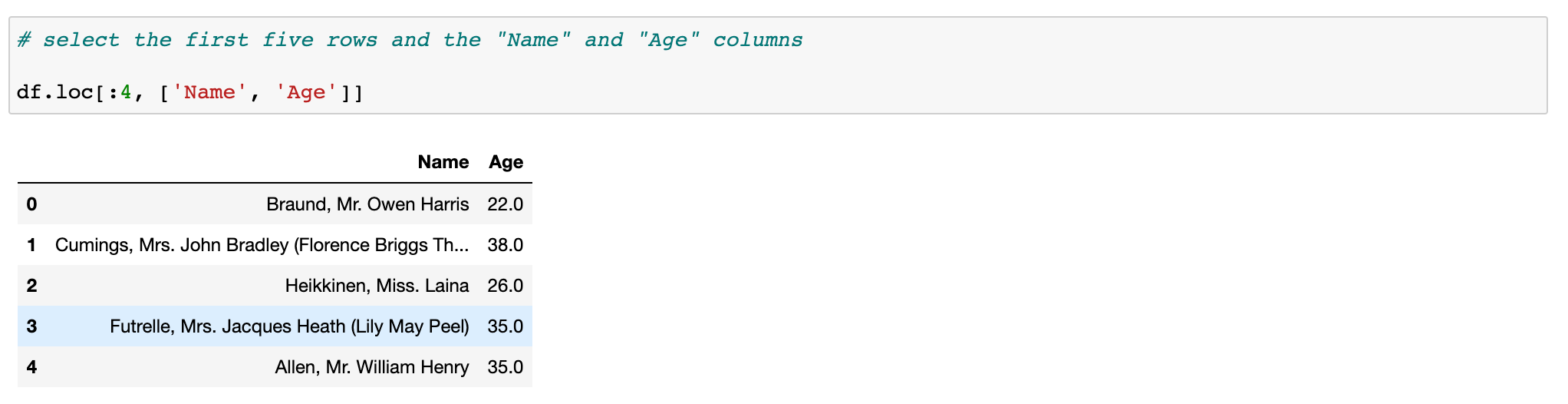

Selecting Rows and Columns

To select specific rows and columns from a DataFrame, you can use the loc and iloc attributes.

The loc attribute is used to select rows and columns by label.

For example, to select the first five rows and the "Name" and "Age" columns, you can do the following:

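df.loc[:4, ['Name', 'Age']]

This will return a DataFrame containing the first five rows of the "Name" and "Age" columns. Unlike ordinary Python slicing, a loc slice includes its end label, so :4 covers the labels 0 through 4 (assuming the default integer index).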

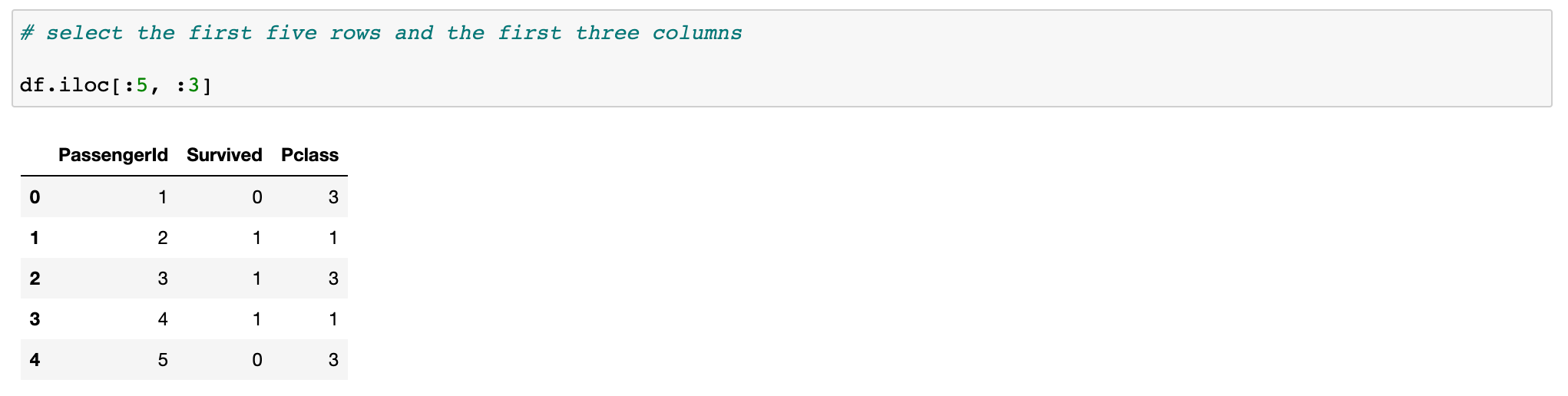

The iloc attribute is used to select rows and columns by integer position.

For example, to select the first five rows and the first three columns, you can do the following:

df.iloc[:5, :3]

This will return a DataFrame containing the first five rows of the first three columns.
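The two attributes treat the end of a slice differently, which is why the loc example uses :4 and the iloc example uses :5 to get the same five rows. Here is a short sketch on invented data with the default integer index (`df_small` is a hypothetical name):

```python
import pandas as pd

# Hypothetical six-row frame with the default integer index 0..5.
df_small = pd.DataFrame({
    "Name": [f"Passenger {letter}" for letter in "ABCDEF"],
    "Sex": ["male", "female", "female", "male", "female", "male"],
    "Age": [22, 38, 26, 35, 54, 2],
})

by_label = df_small.loc[:4, ["Name", "Age"]]  # labels 0..4, end label included
by_position = df_small.iloc[:5, :2]           # positions 0..4, end excluded
four_rows = df_small.iloc[:4]                 # positions 0..3 only

print(len(by_label), len(by_position), len(four_rows))
```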

Data Cleaning

Data cleaning is an important step in any data analysis project. In Pandas, there are several functions that you can use to clean and transform data.

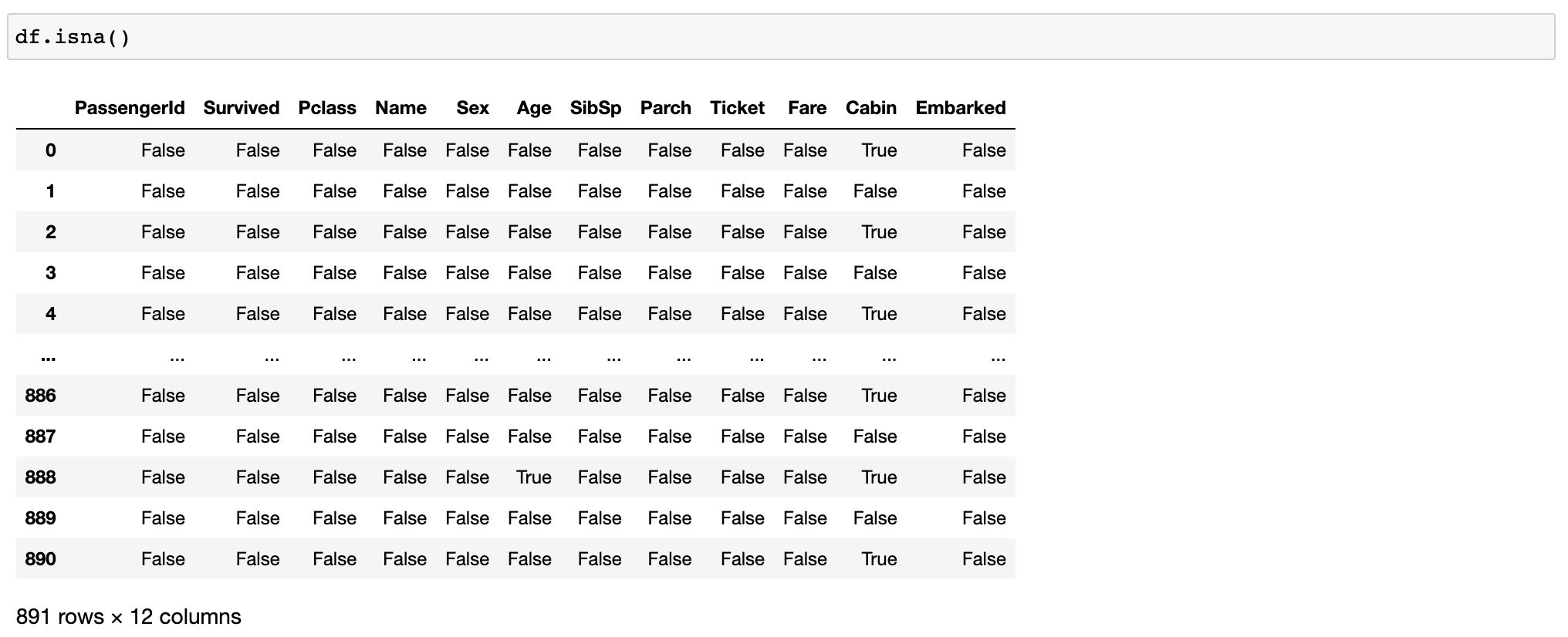

Handling Missing Values

Missing values are a common problem in real-world datasets. Pandas provides several functions for handling missing values, including isna(), fillna(), and dropna().

The isna() function is used to identify missing values in a DataFrame:

df.isna()

This will return a DataFrame containing True for cells that contain missing values and False for cells that contain non-missing values.

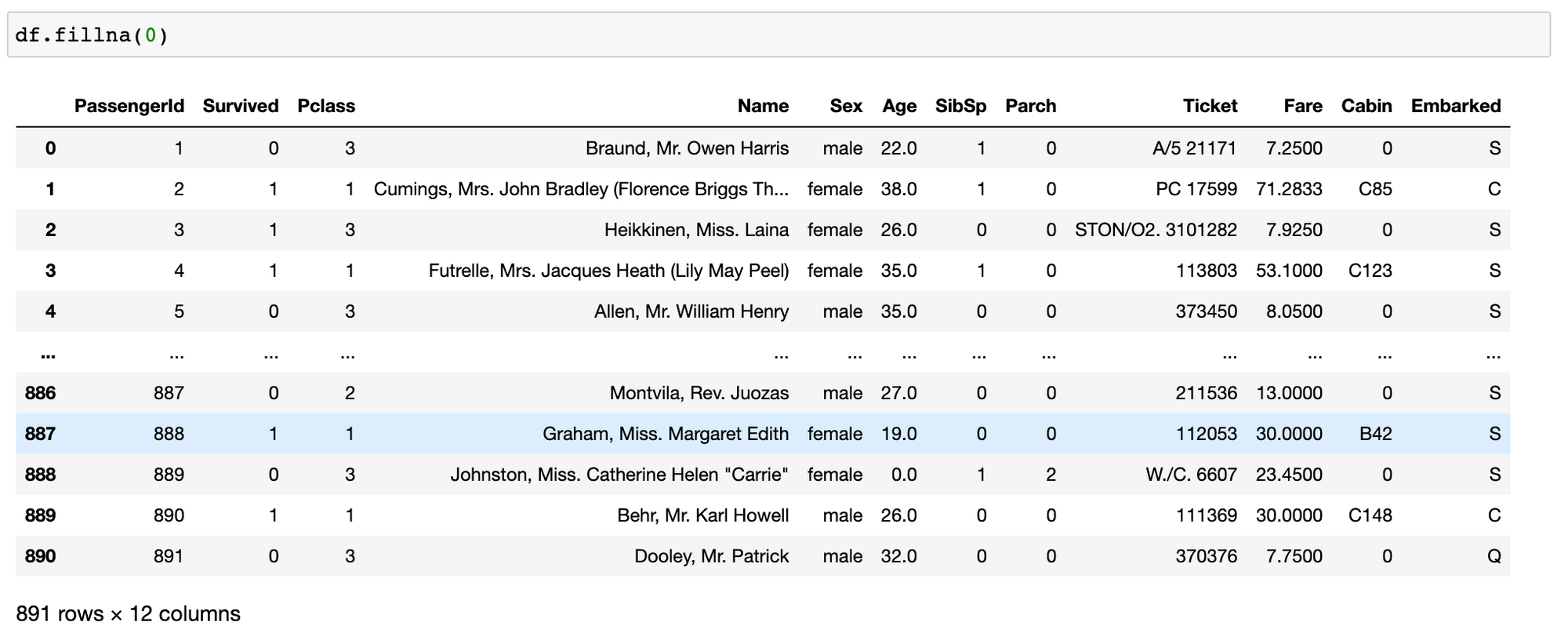

The fillna() function is used to replace missing values with a specified value:

df.fillna(0)

This will replace all missing values in the DataFrame with the value 0.

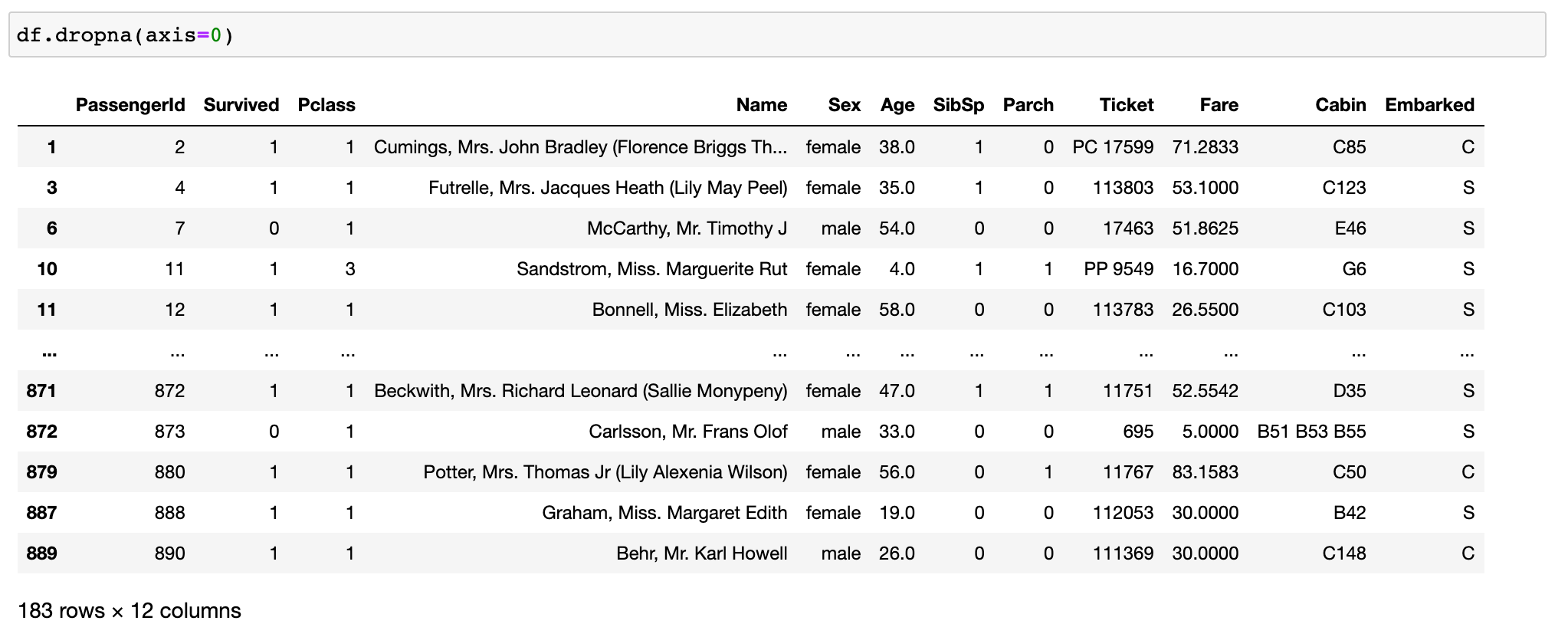

The dropna() function is used to remove rows or columns that contain missing values:

df.dropna(axis=0)

This will remove all rows that contain at least one missing value.

Note: This has reduced the number of rows from 891 to 183, because every row with a missing value in any column is dropped.
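In practice, filling everything with 0 or dropping every incomplete row is often too blunt. The sketch below shows some gentler variations on invented data: counting missing values per column, filling one column with its median, and dropping rows only when a chosen column is missing. The name `df_small` and its values are assumptions, and the median is just one possible fill strategy, not a recommendation for every dataset. These calls return new objects and leave the original DataFrame unchanged.

```python
import pandas as pd

# Hypothetical rows; None marks a missing value.
df_small = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger C", "Passenger D"],
    "Age": [22.0, None, 26.0, None],
    "Cabin": [None, "C85", None, None],
})

# Count missing values per column: True counts as 1 when summed.
missing_per_column = df_small.isna().sum()

# Fill missing ages with the median of the known ages (22 and 26 give 24).
age_filled = df_small["Age"].fillna(df_small["Age"].median())

# Drop rows only when Age is missing, ignoring gaps in other columns.
rows_with_age = df_small.dropna(subset=["Age"])

print(missing_per_column)
print(age_filled)
print(rows_with_age)
```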

Handling Duplicates

Duplicate rows can be a problem in some datasets. Pandas provides the duplicated() and drop_duplicates() functions, which you can use to identify and remove duplicate rows.

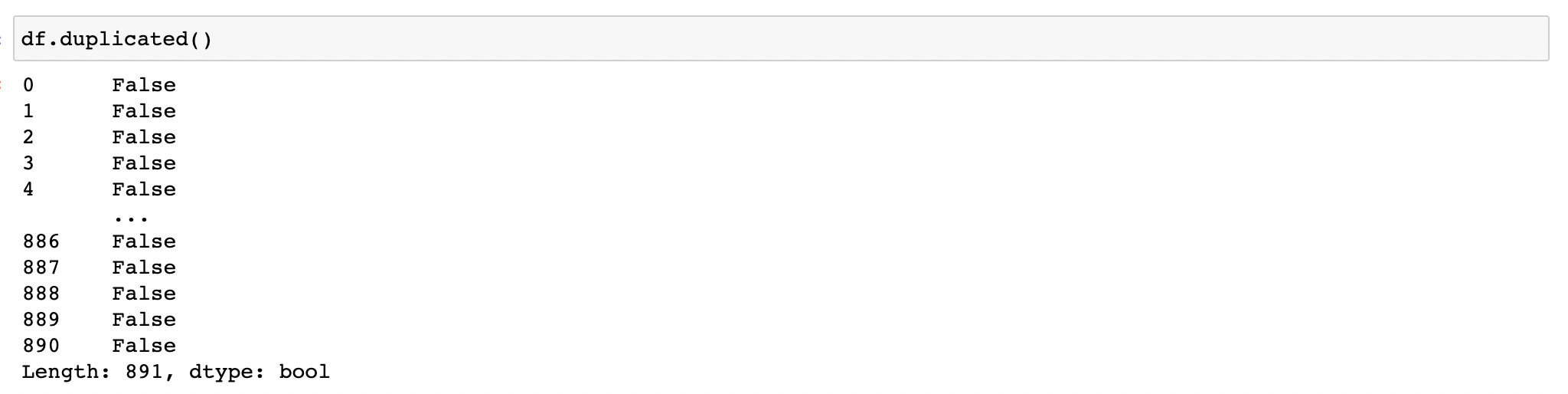

To identify duplicate rows, you can use the duplicated() function:

df.duplicated()

This will return a boolean Series containing True for rows that duplicate an earlier row and False for all other rows.

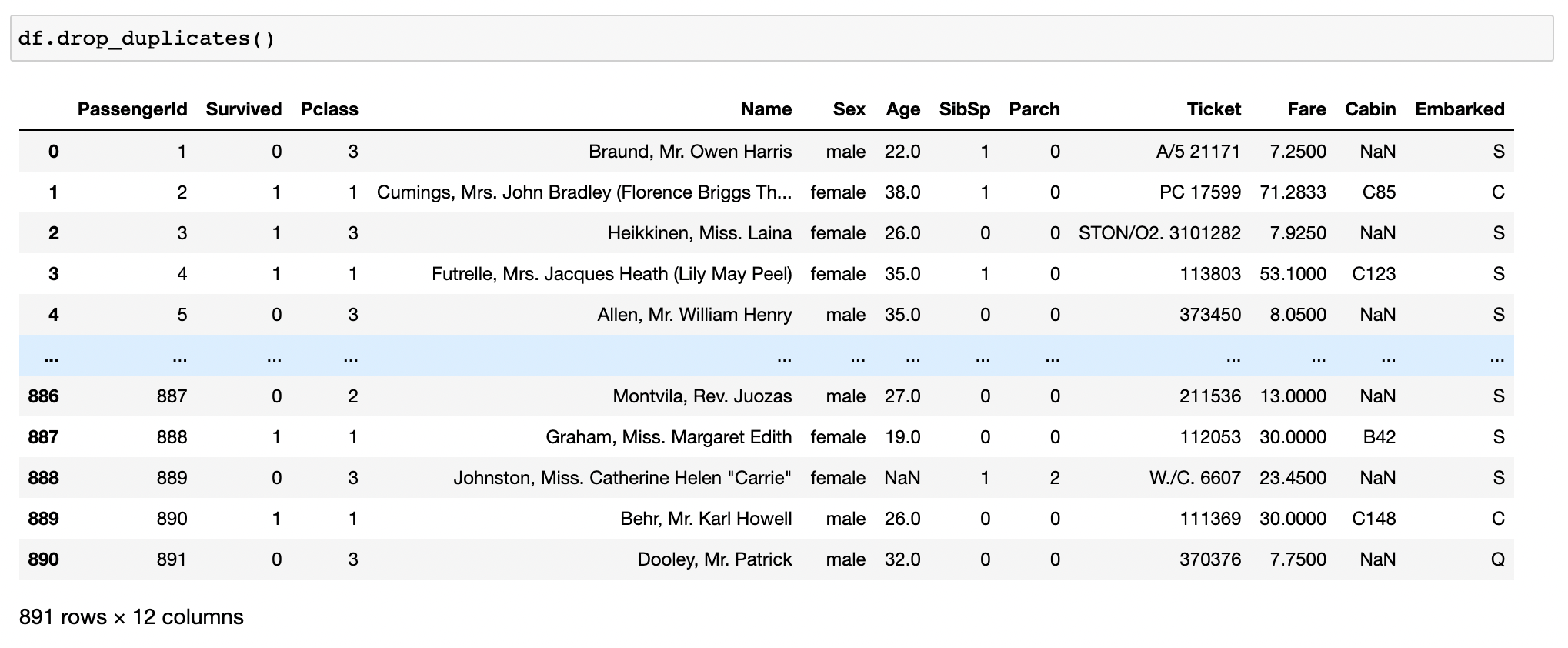

To remove duplicate rows, you can use the drop_duplicates() function:

df.drop_duplicates()

This will remove all duplicate rows from the DataFrame.

Note: Since the number of rows remains 891, the data doesn’t contain any duplicate rows.
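Since the Titanic data has no duplicates, the effect is easier to see on a tiny invented example where one row is repeated. In this sketch `df_small` is a hypothetical name; summing the boolean result is a quick way to count duplicates before removing them.

```python
import pandas as pd

# Hypothetical rows; the third row repeats the first.
df_small = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger A"],
    "Age": [22, 38, 22],
})

dup_mask = df_small.duplicated()      # True only for the repeated (later) row
n_duplicates = int(dup_mask.sum())    # number of duplicate rows
deduped = df_small.drop_duplicates()  # keeps the first occurrence of each row

print(dup_mask.tolist(), n_duplicates, len(deduped))
```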

Data Transformation

In addition to cleaning data, Pandas provides several functions for transforming data.

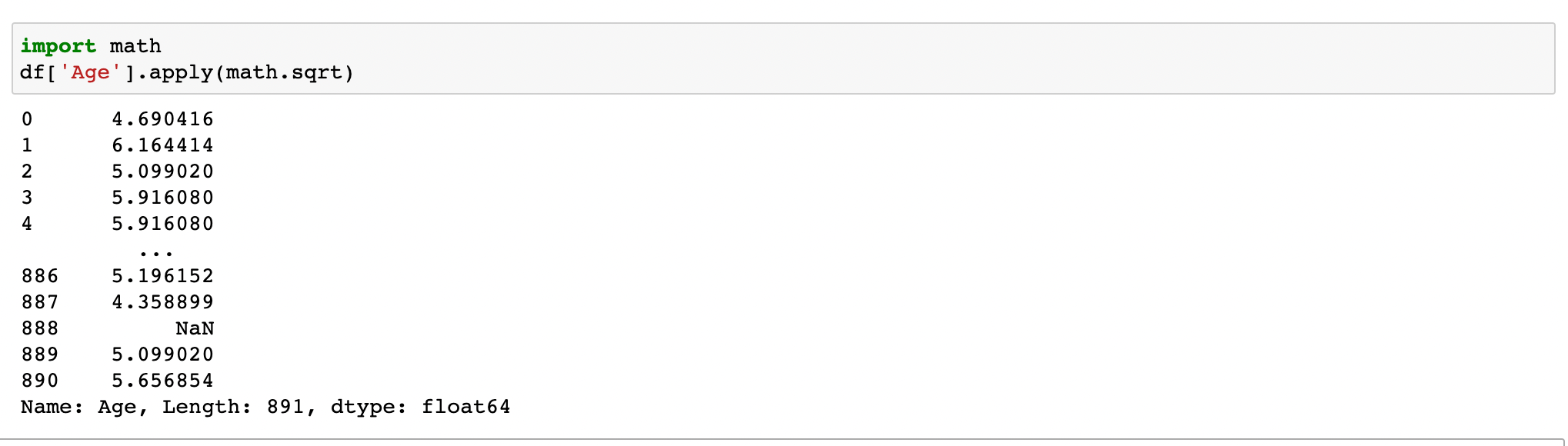

Applying Functions

One can apply a function to a DataFrame using the apply() function.

For example, to apply the sqrt() function to all values in the "Age" column, you can do the following:

import math

df['Age'].apply(math.sqrt)

This will return a Series object containing the square root of each value in the "Age" column.
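For simple numeric operations like this one, a vectorized NumPy function is a common alternative to apply(), since it works on the whole column at once. The sketch below compares the two on invented values; the `ages` Series is a stand-in, and using `np.sqrt` is a suggestion of mine rather than something the tutorial's dataset requires. Missing values stay missing in both cases.

```python
import math

import numpy as np
import pandas as pd

# Hypothetical ages with one missing value.
ages = pd.Series([4.0, 9.0, None, 16.0], name="Age")

roots_apply = ages.apply(math.sqrt)  # calls math.sqrt once per element
roots_numpy = np.sqrt(ages)          # vectorized: one call for the whole Series

print(roots_apply)
print(roots_numpy)
```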

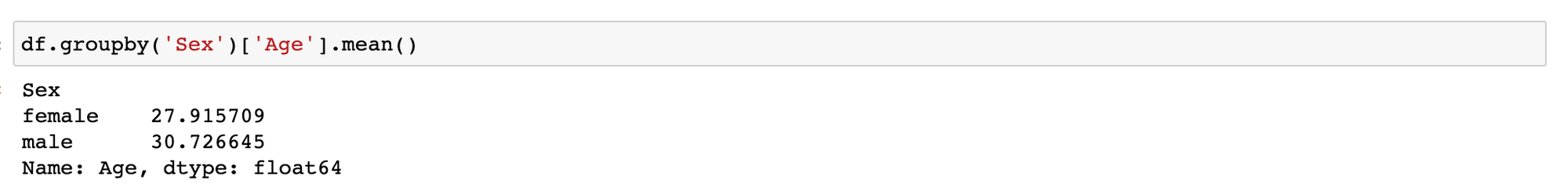

Grouping Data

One can group data in a DataFrame using the groupby() function.

For example, to group the data by the "Sex" column and calculate the mean value of the "Age" column for each group, you can do the following:

df.groupby('Sex')['Age'].mean()

This will return a Series object containing the mean age for males and females.

Note: You can apply other aggregations with groupby, such as sum, count, and median, depending on the kind of insight you are trying to get from your data.
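Several of those metrics can be computed in one pass by handing a list of aggregation names to agg(). Here is a minimal sketch on invented values (`df_small` is a hypothetical name):

```python
import pandas as pd

# Hypothetical rows for illustration only.
df_small = pd.DataFrame({
    "Sex": ["male", "female", "female", "male"],
    "Age": [20.0, 30.0, 40.0, 60.0],
})

# One metric: a Series indexed by group.
mean_age = df_small.groupby("Sex")["Age"].mean()

# Several metrics at once: a DataFrame with one column per metric.
age_stats = df_small.groupby("Sex")["Age"].agg(["count", "mean", "median"])

print(mean_age)
print(age_stats)
```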

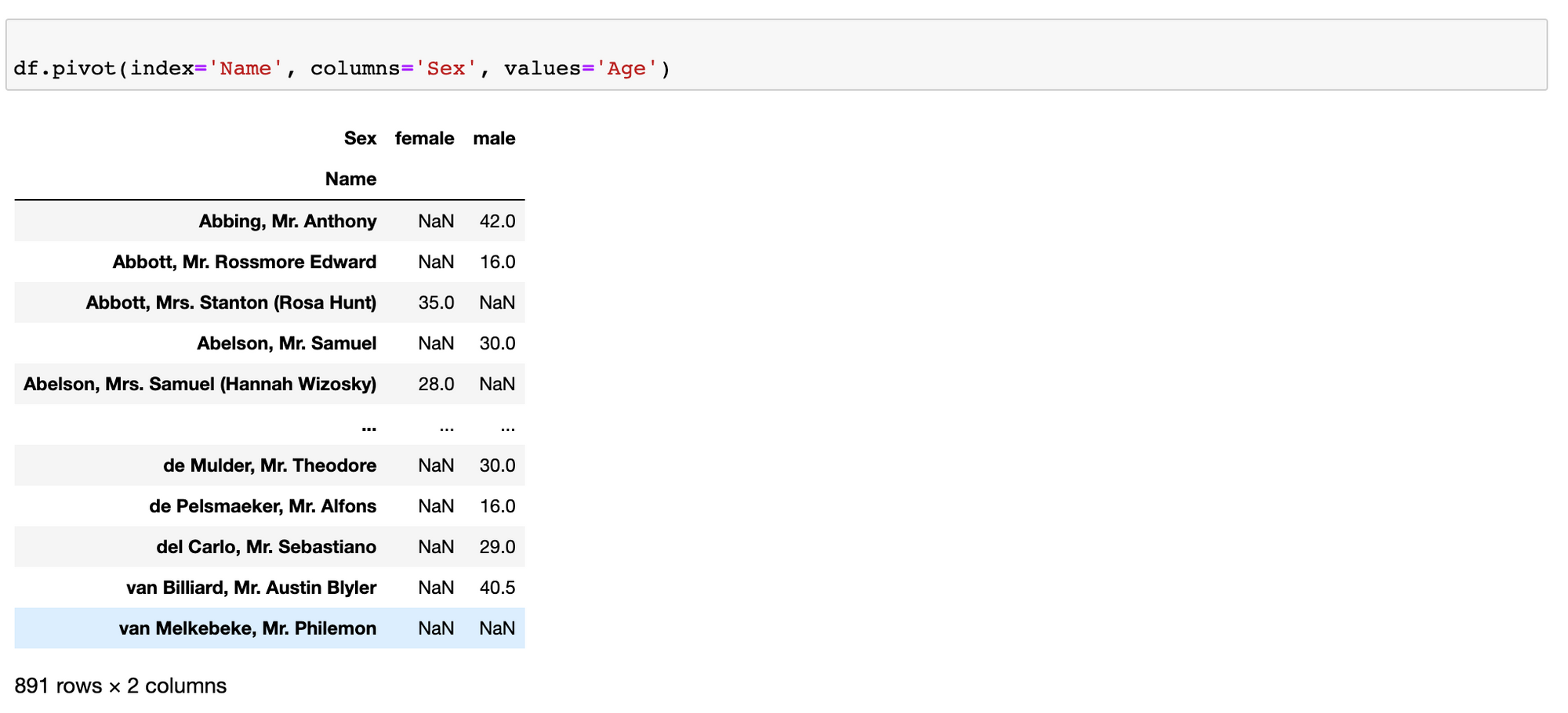

Pivoting Data

One can pivot a DataFrame using the pivot() function.

For example, to pivot the data so that the "Name" column becomes the index and the "Sex" column becomes the columns, with the values in the "Age" column as the values, you can do the following:

df.pivot(index='Name', columns='Sex', values='Age')

This will return a DataFrame where each row represents a unique name and the columns represent the sex of the person, with the values being the corresponding ages.
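Two things are worth knowing about the result. Cells with no matching data are filled with NaN, and pivot() raises an error if the same index and column combination appears more than once. When the goal is a summary rather than a pure reshape, pivot_table() aggregates the values instead. The sketch below illustrates both on invented rows; `df_small` is a hypothetical name, and mean is just one possible aggregation.

```python
import pandas as pd

# Hypothetical rows with unique names.
df_small = pd.DataFrame({
    "Name": ["Passenger A", "Passenger B", "Passenger C"],
    "Sex": ["male", "female", "female"],
    "Age": [22.0, 38.0, 26.0],
})

# Reshape: one row per name, one column per sex, NaN where nothing matches.
wide = df_small.pivot(index="Name", columns="Sex", values="Age")

# Summarize: pivot_table aggregates the values that fall in the same group.
mean_by_sex = df_small.pivot_table(index="Sex", values="Age", aggfunc="mean")

print(wide)
print(mean_by_sex)
```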

Merging Data

One can merge two or more DataFrames using the merge() function.

For example, if you have two DataFrames, df1 and df2, with a common column "ID", you can merge them on this column as follows:

merged_df = pd.merge(df1, df2, on='ID')

This will return a new DataFrame, merged_df, containing all the columns from df1 and df2, where the rows are matched on the common "ID" column.

Note: This is commonly done with train and test datasets, so that the same transformations can be applied to both, and when collating data from diverse sources, especially when the datasets share the same features.
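By default, merge() performs an inner join, so only IDs present in both DataFrames survive. The how parameter changes that behavior. The following sketch uses two invented DataFrames; the "Name" and "Fare" columns and all values are assumptions made for the example.

```python
import pandas as pd

# Hypothetical frames that share the "ID" column.
df1 = pd.DataFrame({
    "ID": [1, 2, 3],
    "Name": ["Passenger A", "Passenger B", "Passenger C"],
})
df2 = pd.DataFrame({
    "ID": [2, 3, 4],
    "Fare": [10.0, 20.0, 30.0],
})

# Default (inner) join: keeps only IDs found in both frames.
inner_df = pd.merge(df1, df2, on="ID")

# Left join: keeps every row of df1 and fills unmatched columns with NaN.
left_df = pd.merge(df1, df2, on="ID", how="left")

print(inner_df)
print(left_df)
```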

Conclusion

In this article, I have covered the basics of Pandas, from loading and viewing data to cleaning, transforming, and analyzing it. With these skills, you should be able to work with a wide range of datasets and perform various data analysis tasks using Pandas.

Keep in mind that this is just a brief introduction to Pandas, and there are many more advanced features and functions available that can help you with more complex data analysis tasks. To learn more about Pandas, be sure to check out the official documentation and explore the other resources available online.

If you want to get started with data analytics and are looking to improve your skills, you can check out our Learning Track.


2026 Resagratia (a brand of Resa Data Solutions Ltd). All Rights Reserved.