Building an Oilfield Production Surveillance Dashboard

Admin

Introduction

Optimization of oil production using data and analytics involves using advanced technologies and algorithms to collect and analyze large amounts of data related to the extraction process. This can include data on drilling operations, well performance, reservoir characteristics, and environmental factors, among others.

By analyzing this data, companies can identify patterns, trends, and potential issues in the production process, which can be used to optimize the efficiency and effectiveness of the extraction process

Oilfield Production Surveillance (OPS) is a process of monitoring and analyzing the performance of an oilfield and its associated wells to optimize production and reduce costs. OPS is closely related to the optimization of oil production using data and analytics, as it involves the collection and analysis of large amounts of data related to the production process.

OPS typically involves the use of advanced technologies such as sensors, data loggers, and communication systems to collect real-time data on well performance, production rates, and other key factors. This data is then analyzed using advanced algorithms and machine learning techniques to identify patterns, trends, and potential issues in the production process.

In this data digest, I will be walking you through how to create an Oilfield Production Surveillance Dashboard using Google Cloud Platform (GCP). I will touch base on the following:

- Setting up a Google Cloud Platform (GCP)

- Parameters for each well in a producing oilfield

- Dashboard building process requirements and iterations

- Implementation of the project in Google Cloud Platform (GCP) and Python using Jupyter Notebook

Let’s get started…

If you prefer to watch the tutorial, here's a recording of the session with the instructor otherwise continue with the step-by-step tutorial.

Instructor: Oladayo Siyanbola

Setting up Google Cloud Platform (GCP)

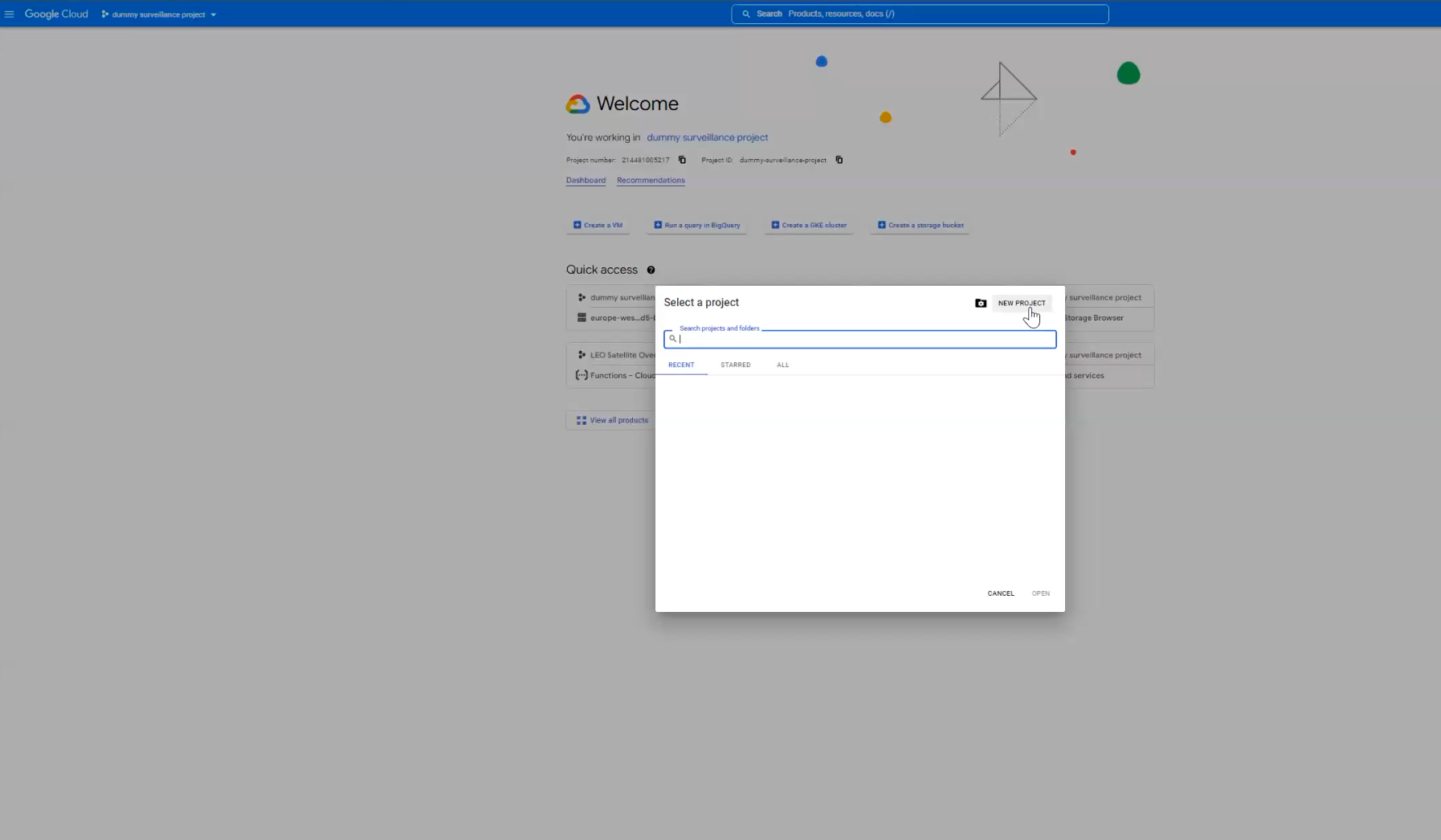

- First, start by creating the project in Google Cloud Platform (GCP) by clicking on new project

Note: You can access the Google Cloud Platform using the link

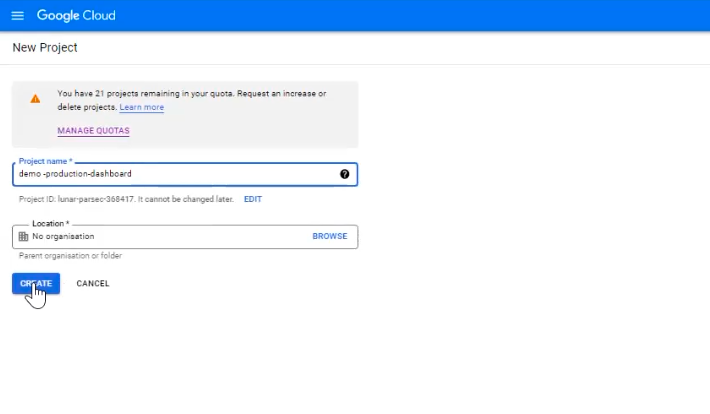

- Next, enter the name of the project and organization you want the project to be aligned to. I will call this project “demo-production-dashboard”

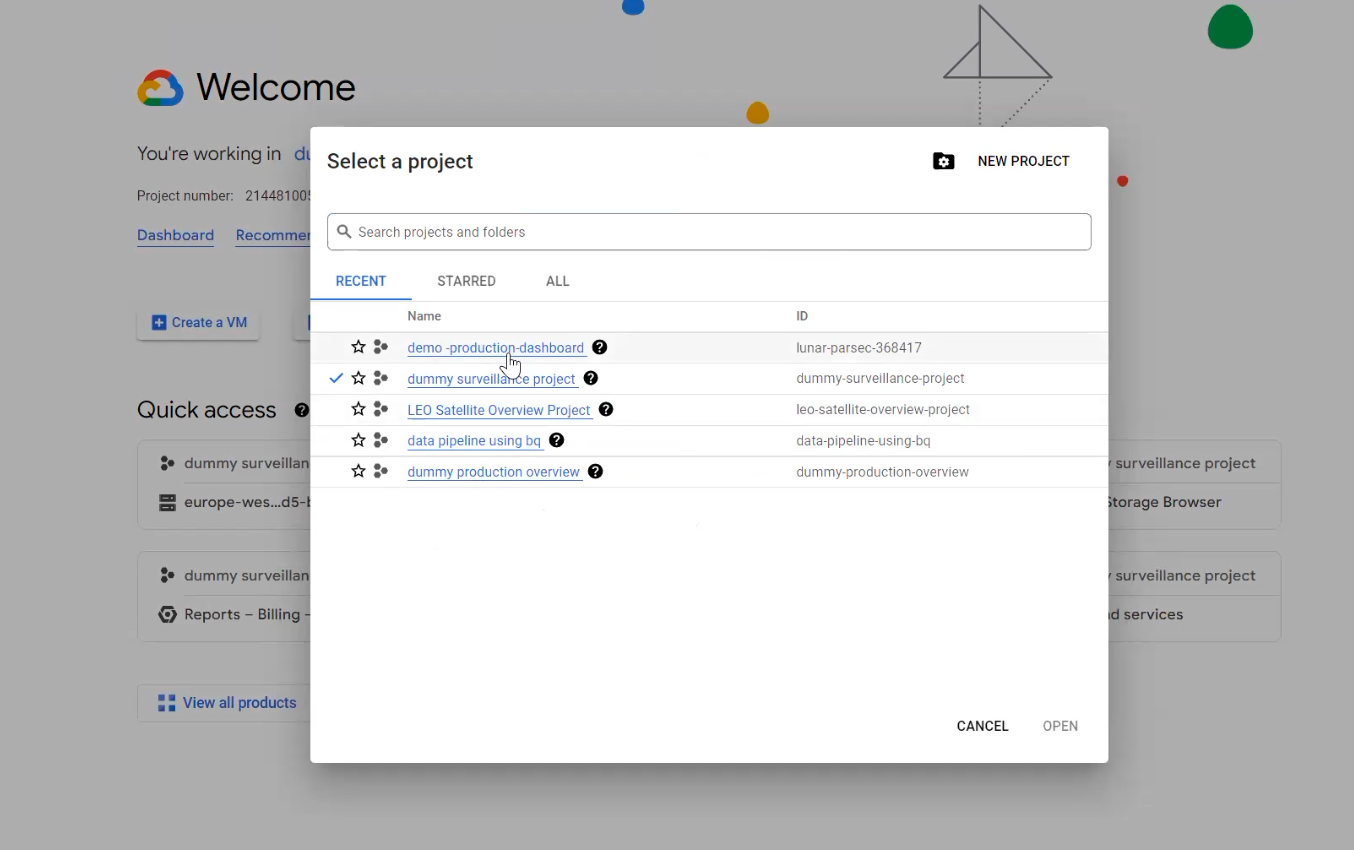

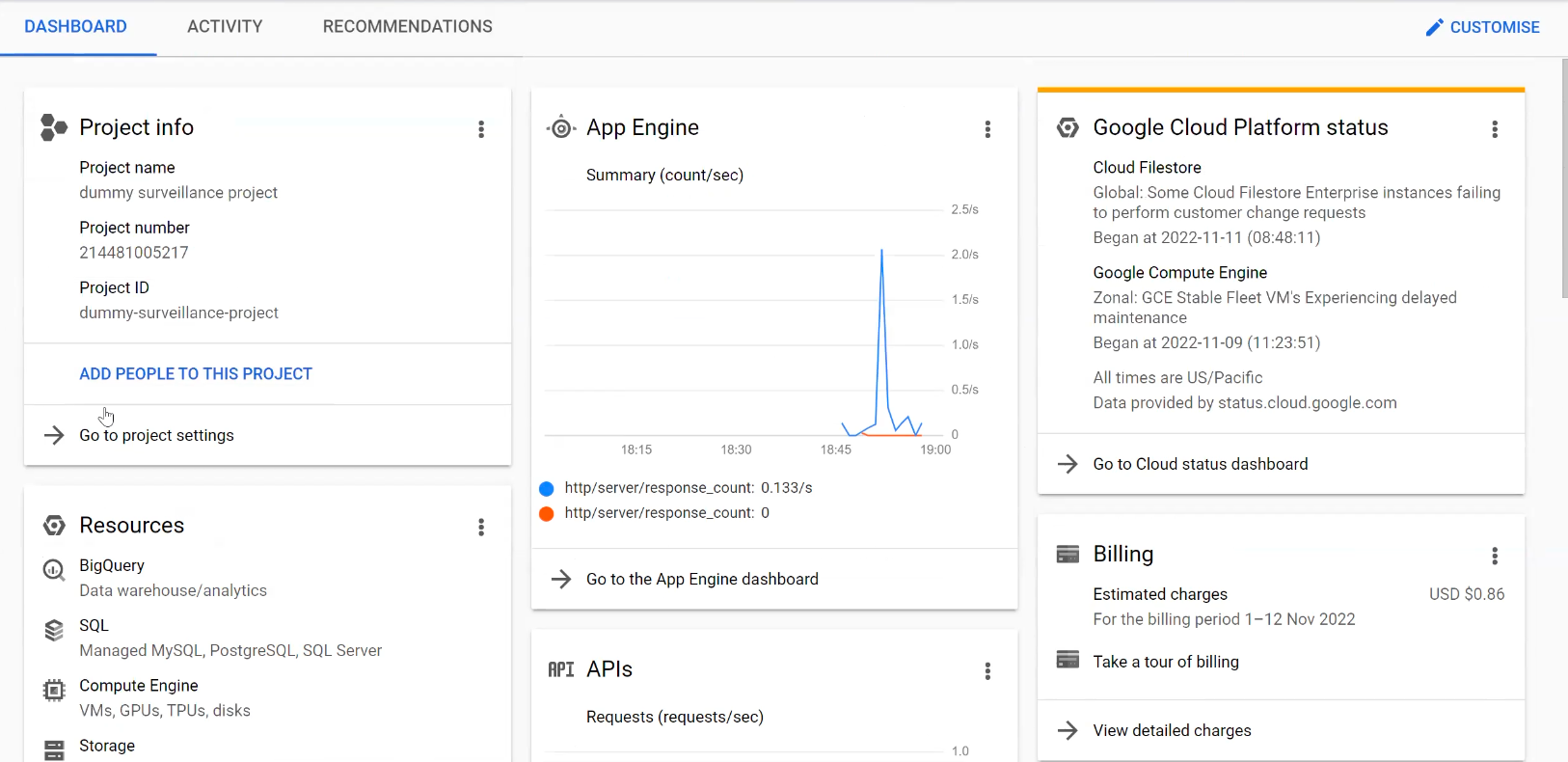

- Next, select the dashboard created to see all necessary information

- The project dashboard comes up with all the information about the resource that is available on GCP for this project.

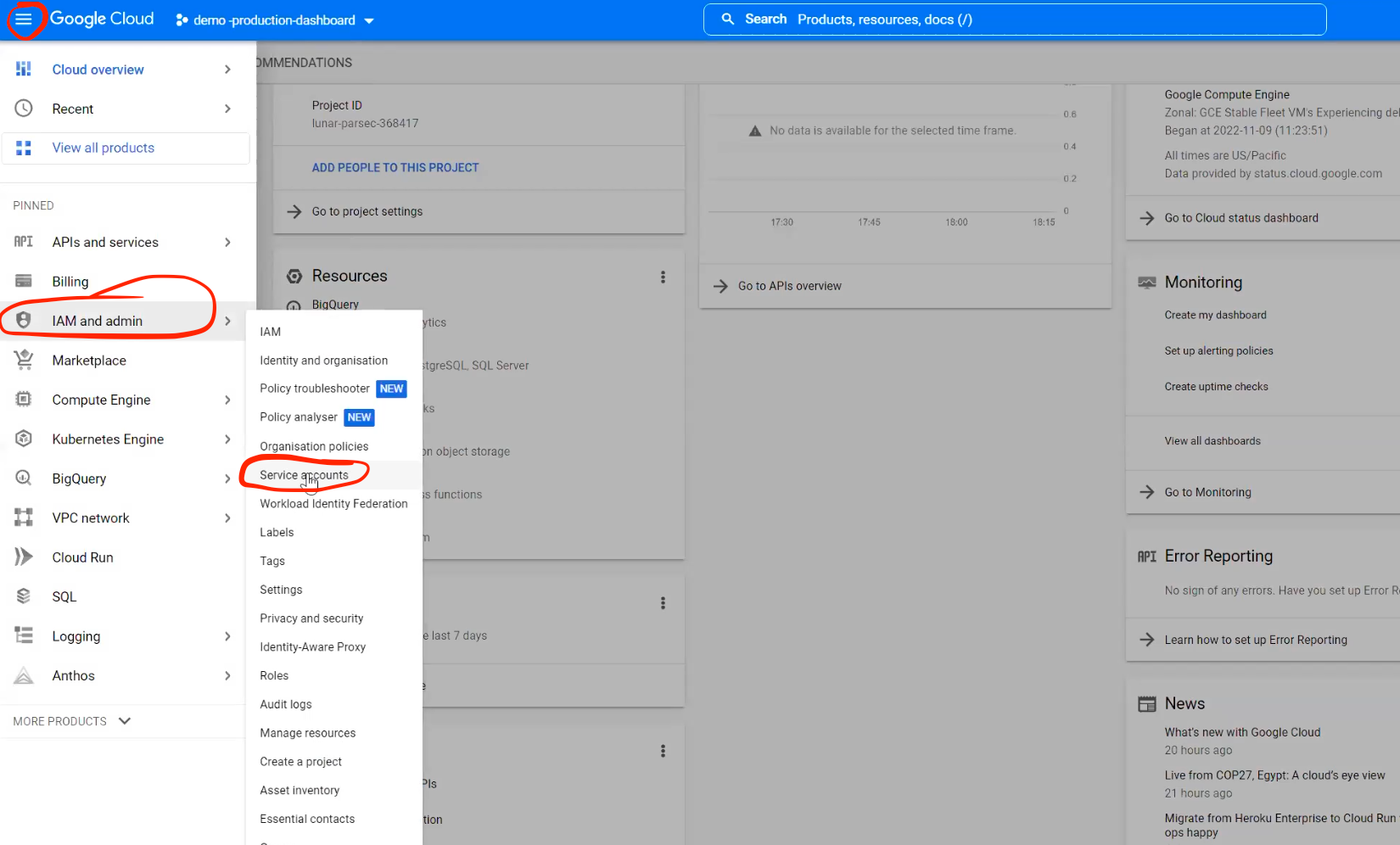

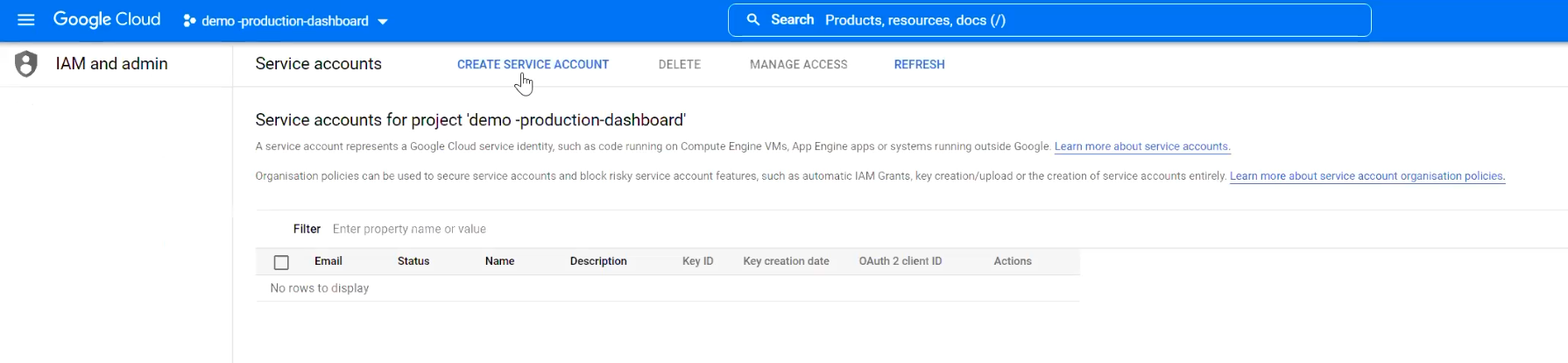

- Next, a service account must be created in order to get the required keys to connect the IDE (integrated development environment) to GCP

- Click on create a service account and this allows to input some information

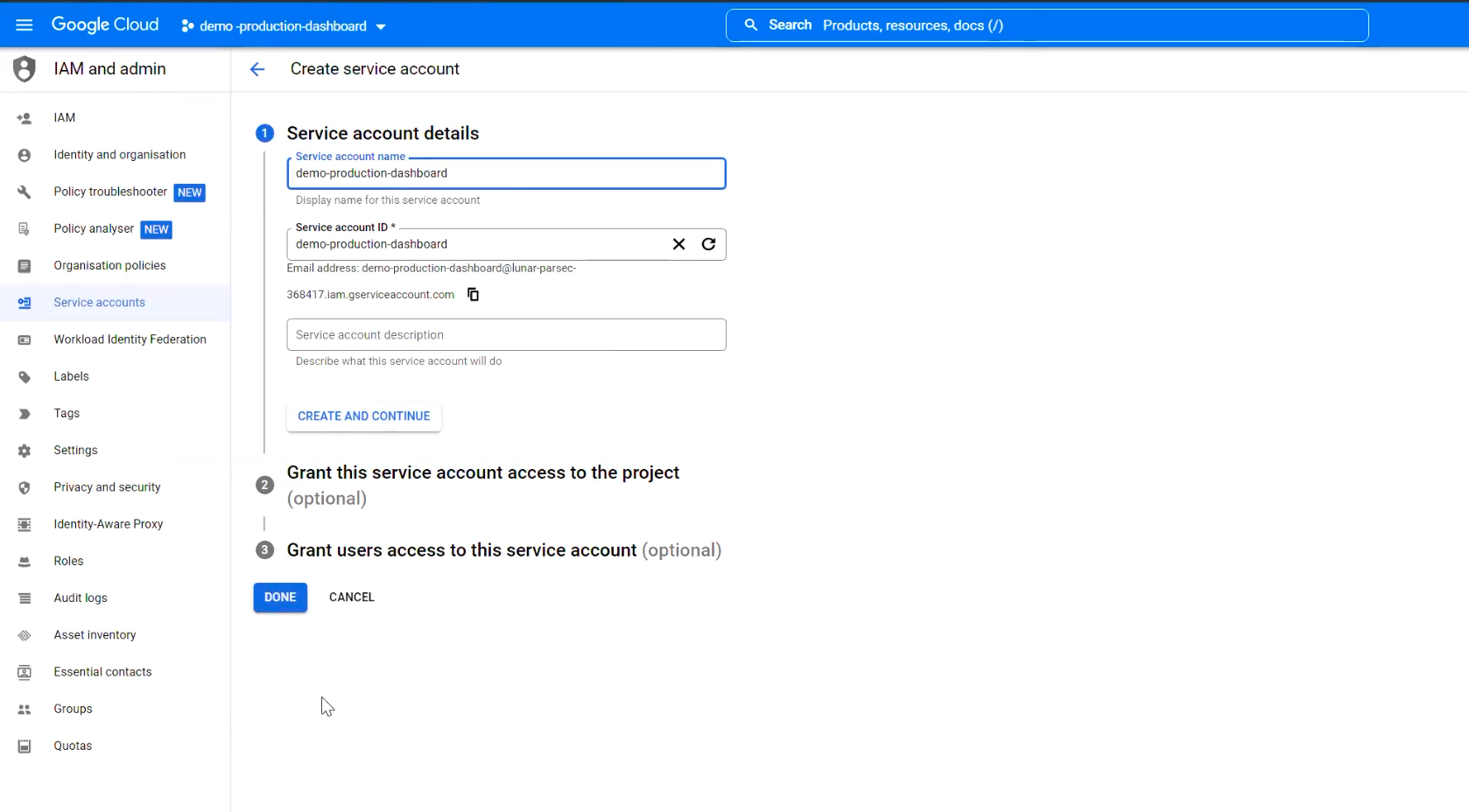

- Next, input the service account name and configure the access (owner and editor)

- Create a key, which will generate a json file that will be saved on your computer. This key can be used to connect to your IDE.

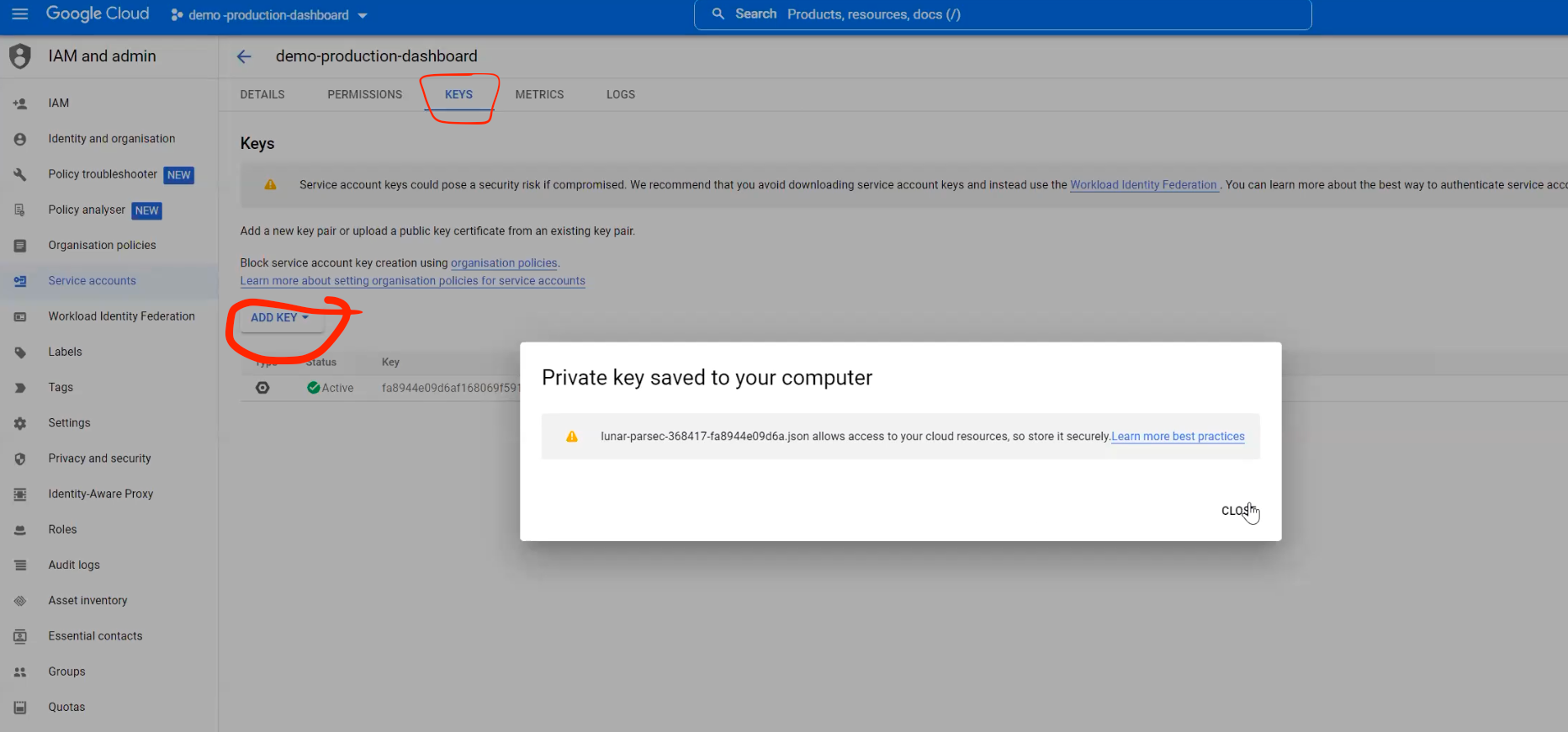

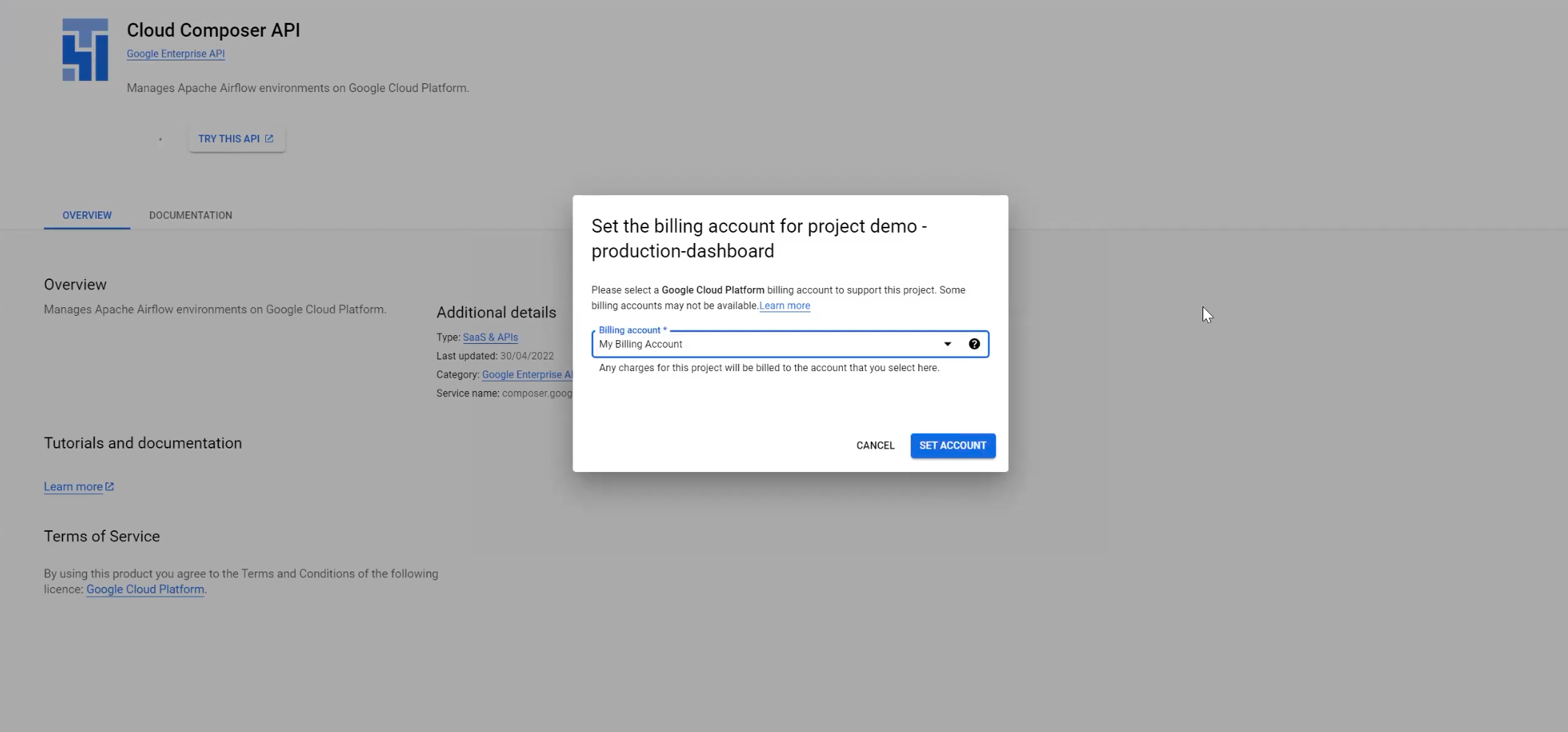

- Next, create a composer that allow you to run the entire ETL (extract, transform and load) in GCP using airflow

- This will also bring a prompt to enable billing for the cloud composer API

Parameters for each well in a producing oilfield

- Production rate (oil, gas and water): This is the rate at which oil is being produced from the well, typically measured in barrels per day (BPD) or cubic meters per day (CMD).

- Pressure: Pressure is an important parameter that can affect the flow of oil from the reservoir to the well. Pressure can be measured at various points in the production process, including at the wellhead, within the wellbore, and in the reservoir.

- Choke size, basic sediment & water: Choke size refers to the opening size of a valve used to control fluid flow. Basic sediment refers to solid particles that settle at the bottom of fluids. Water content is crucial to monitor in oil or gas streams.

- Gas-oil ratio (GOR): GOR is the amount of natural gas produced along with the oil. A high GOR can indicate that the reservoir is producing more gas than oil, which can affect production rates and the composition of the produced fluid stream.

- Uptime: This refers to the amount of time that equipment or facilities are operational and available for production or processing activities. High uptime is crucial for maximizing production and minimizing downtime, which can result in lost revenue and increased costs.

Dashboard building process requirements and Iterations

- Data needs to be dynamically updated on the surveillance dashboard to be created

- The dashboard can answer some common questions always asked

- The web framework for the dashboard needs to be deployed

Building process

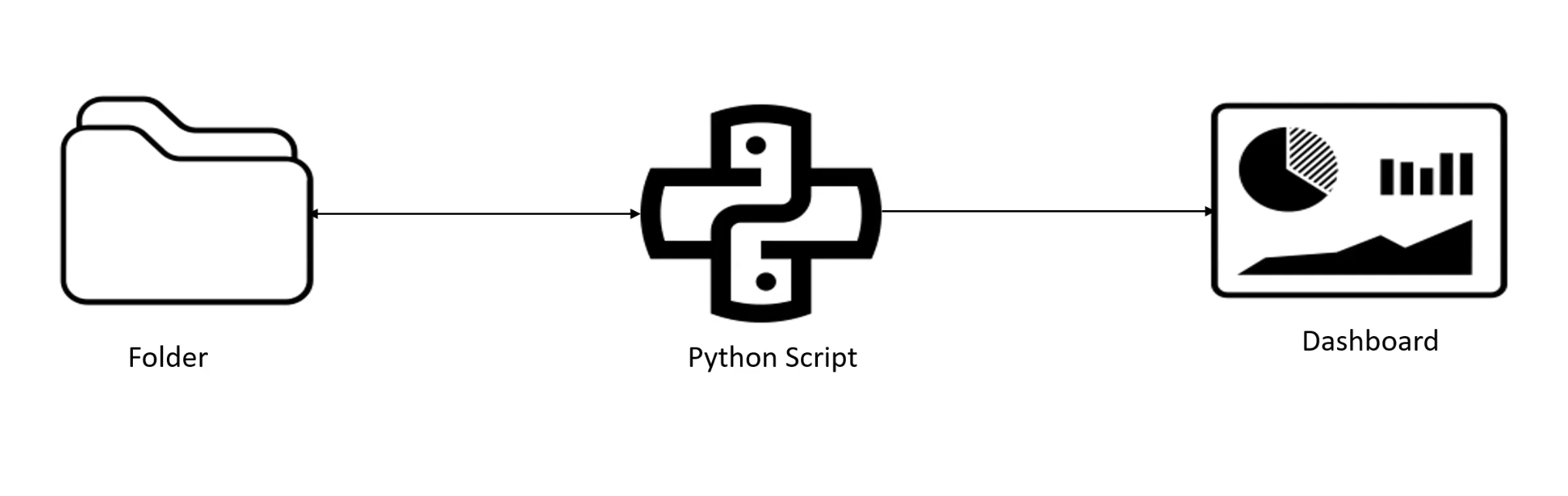

First iteration - Extraction of three (3) month worth of data using python and then leveraging dash and plotly to create the surveillance dashboard visualization still with the python script.

The interactive dashboard with dash and plotly

Limitations of the first iteration

- I had to manually run the script each time I added a new daily report to the folder

- The dashboard output only runs locally on my computer

- I can sure do better with the dashboard output in terms of layout and presentation of the visuals

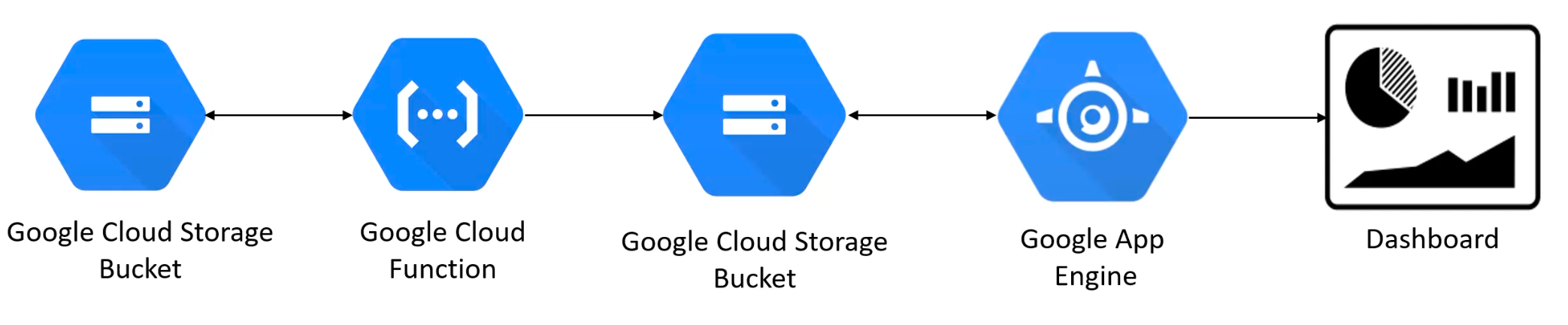

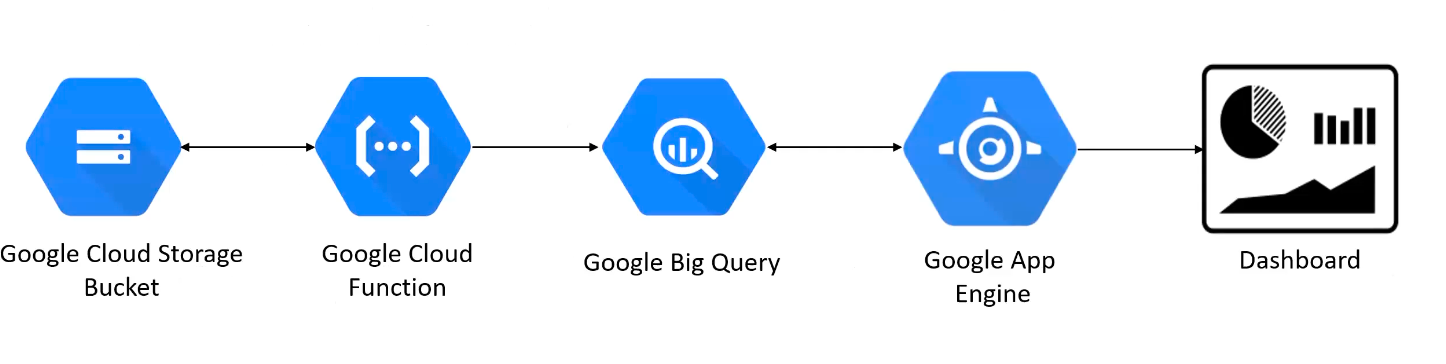

Second iteration - Moving all the production files from a local storage to a cloud storage bucket, then the function next to it help to extract and transform the data and then moves to the next bucket for visualization. When a new file come into the cloud bucket, the cloud function runs and perform the ETL operation again.

The Google App Engine helps to deploy web application in GCP. This is a google product that function as a service to avoid external applications for deployments*

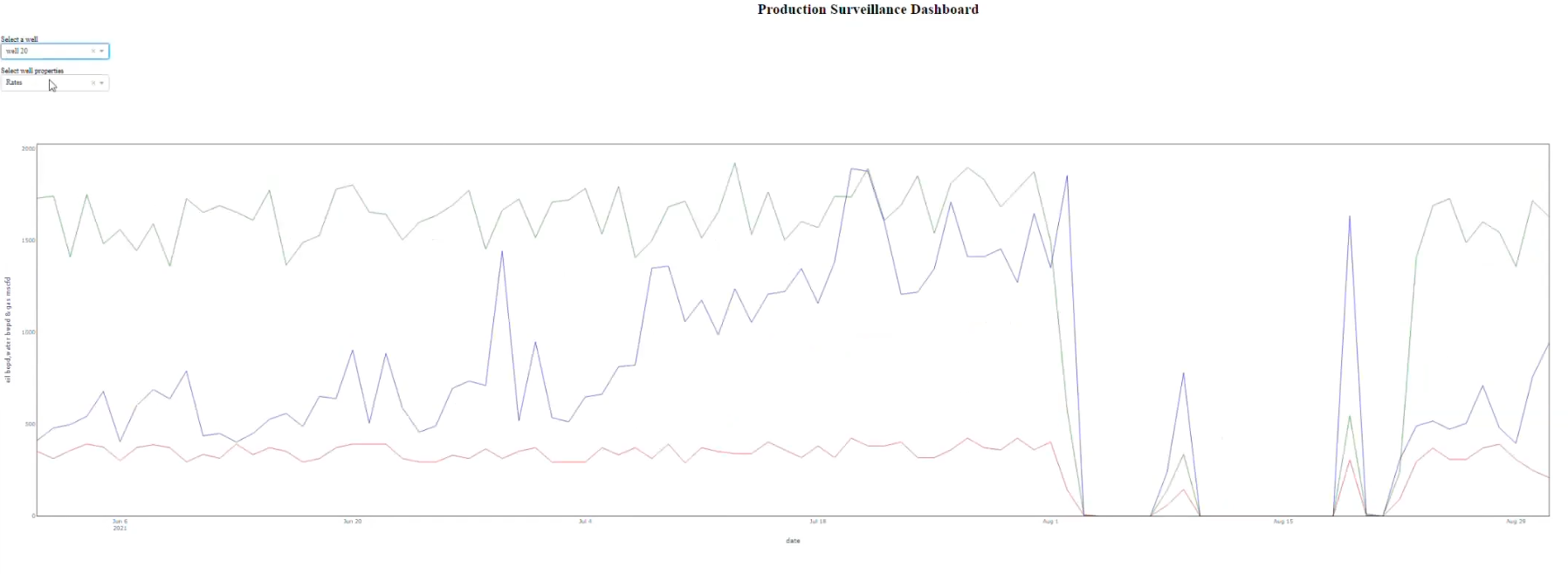

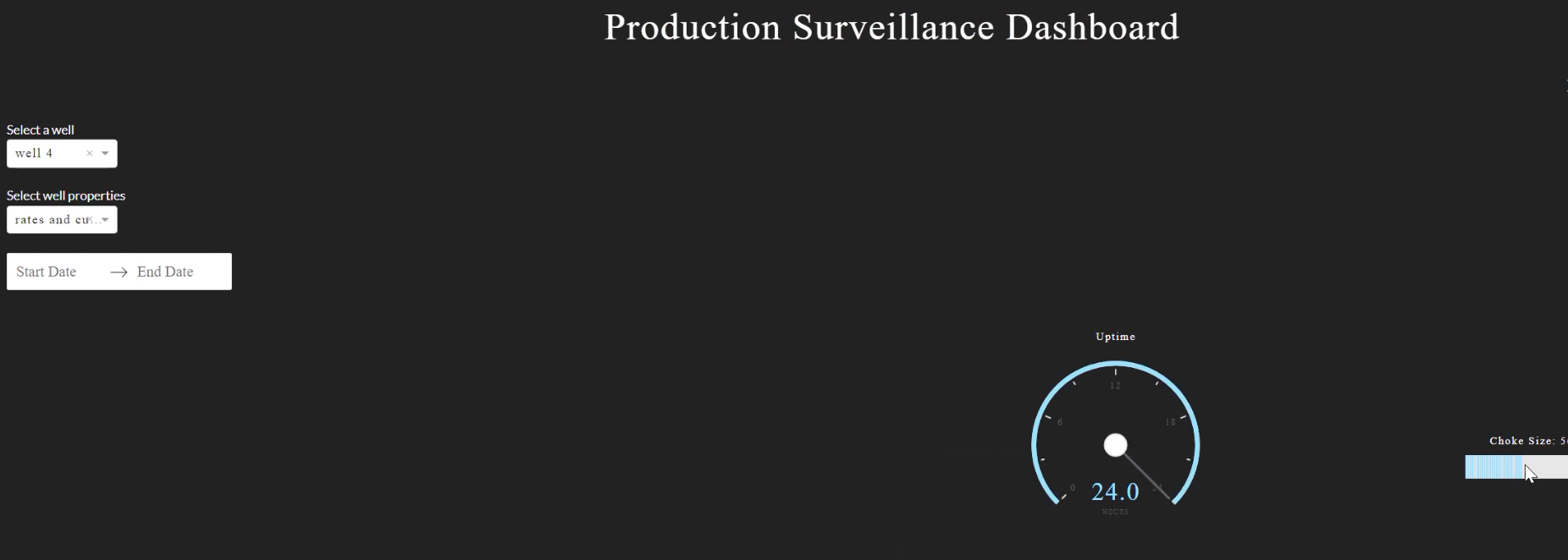

- The final dashboard that is created in this second iteration process allow to select the specific well, the well properties and the date I want to deal with.

- This then generate the oil production rate, gas production rate, water production rate and the cumulative production in a bid to aid the optimization

Limitations of the second iteration

- Every time the python scripts runs as a result of a new daily report added to the first google cloud storage bucket, it reads the entire files in the bucket all over

- Storing the transformed data as an excel file in the second cloud storage means the data cannot be queried for analysis

- While the dashboard output works very fine for additional (same wells) data from the subsequent daily reports, it sure won’t capture data from a new well.

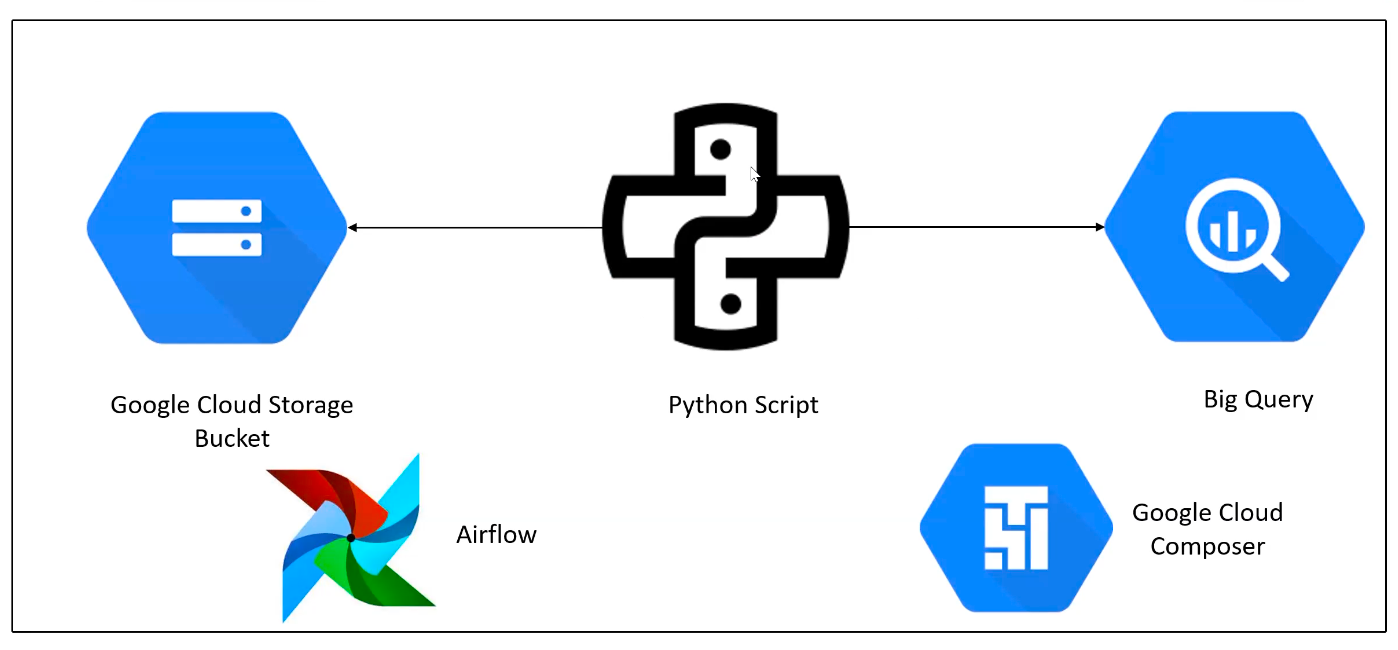

Update iteration - This is simply implementing an enterprise data warehousing feature for all data which allows for querying the data even as new file are added to the cloud bucket, but all other iterations in the pipeline remains the same. Also, the cloud function unlike in the previous iterations now only read the new file and append to the existing one for processing

Demo of the update pipeline

Implementation of the project in Google Cloud Platform (GCP) and Python using Jupyter Notebook

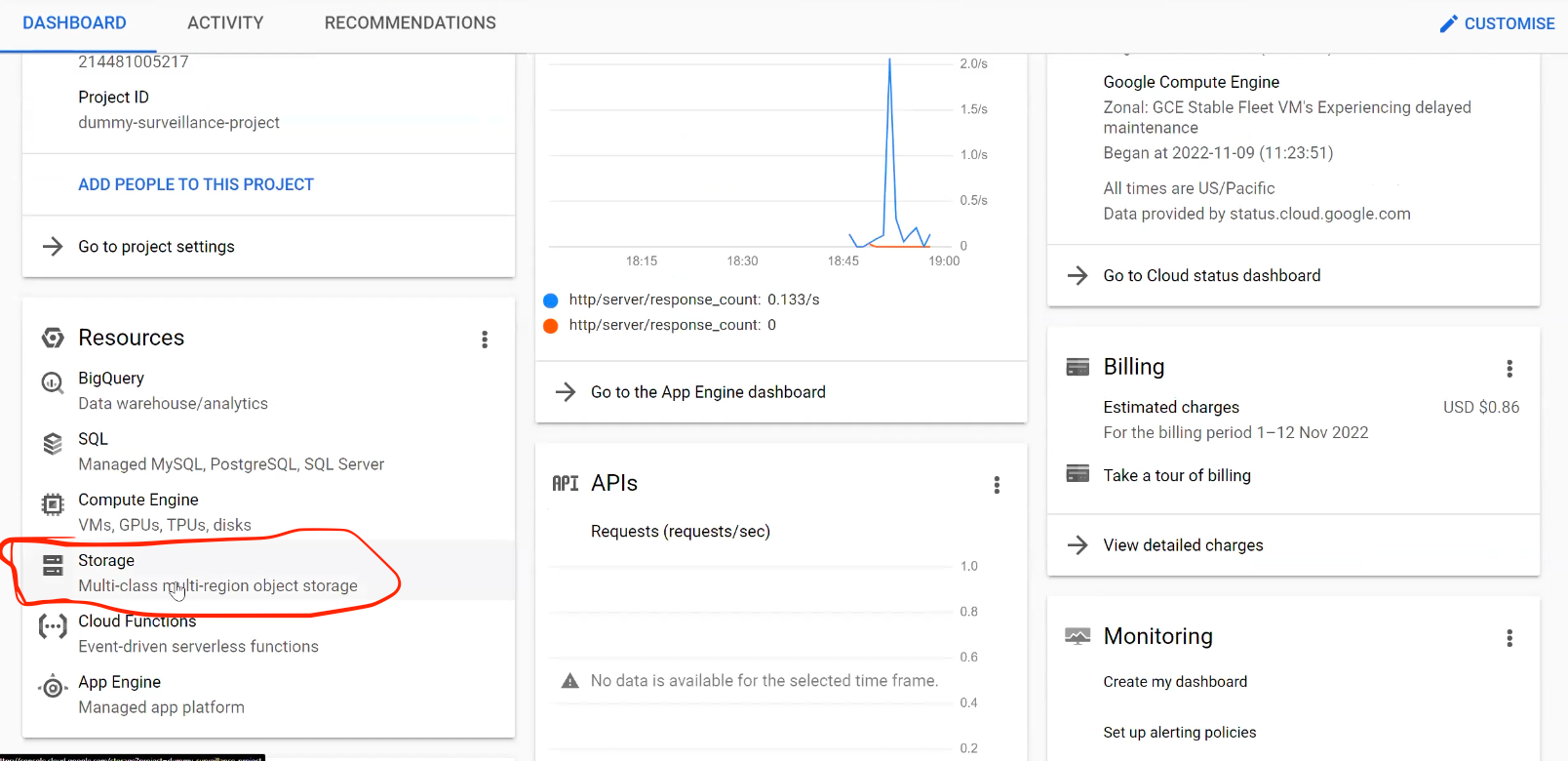

- After creating a google cloud platform, go to the dashboard associated with the project initiated.

Note: For this project, I will be leveraging an existing project already created on the GCP platform

- To Create a bucket, select storage to do so.

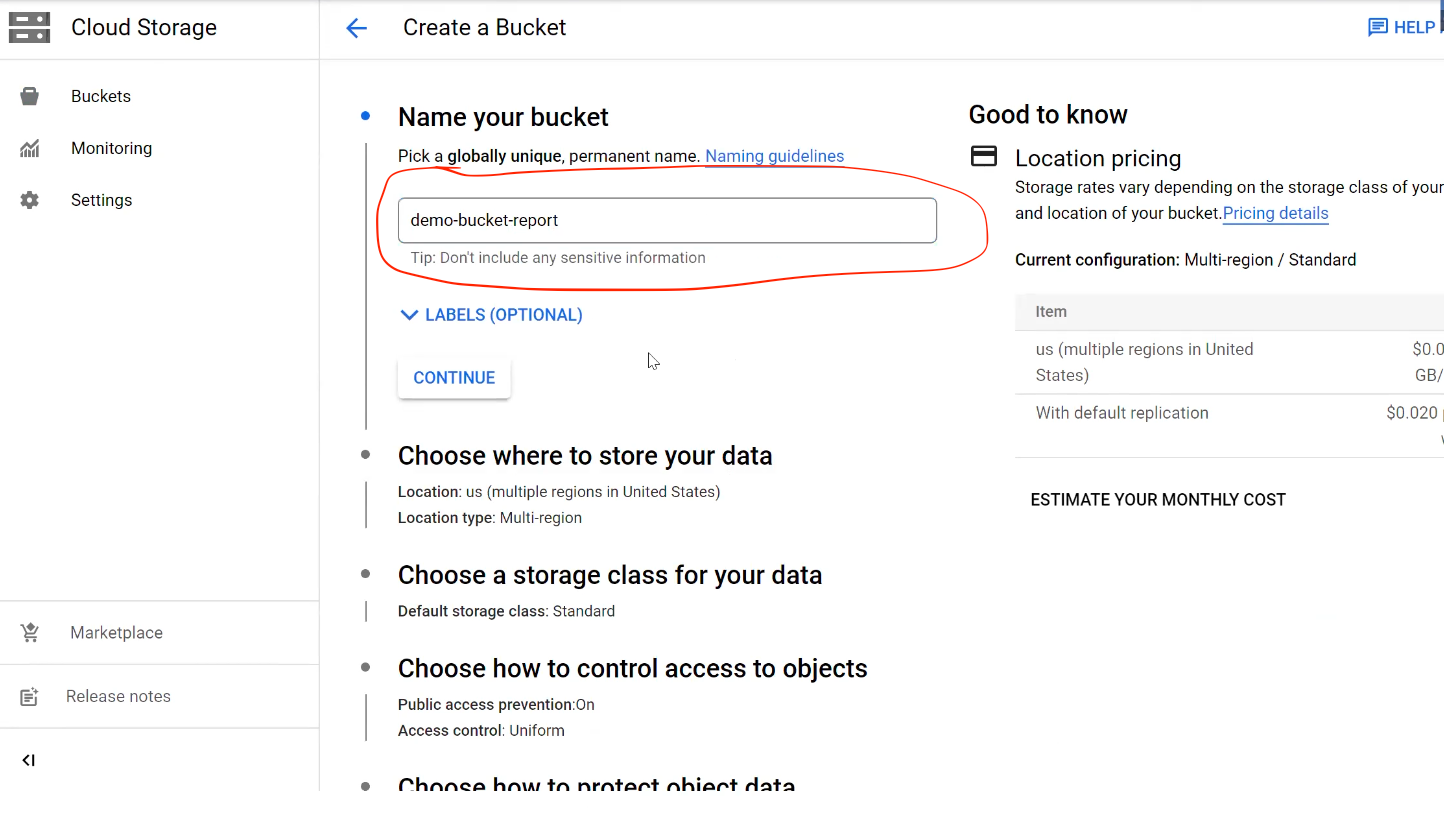

- This bring up the prompt, select “create” button which brings up the navigator for details about the bucket.

- Fill in the required details to create the bucket, such as the unique name of the bucket, region where you want the bucket to be held, choose a storage class (choose preference based on frequency of usage), choose how to control access to objects and how to protect object data.

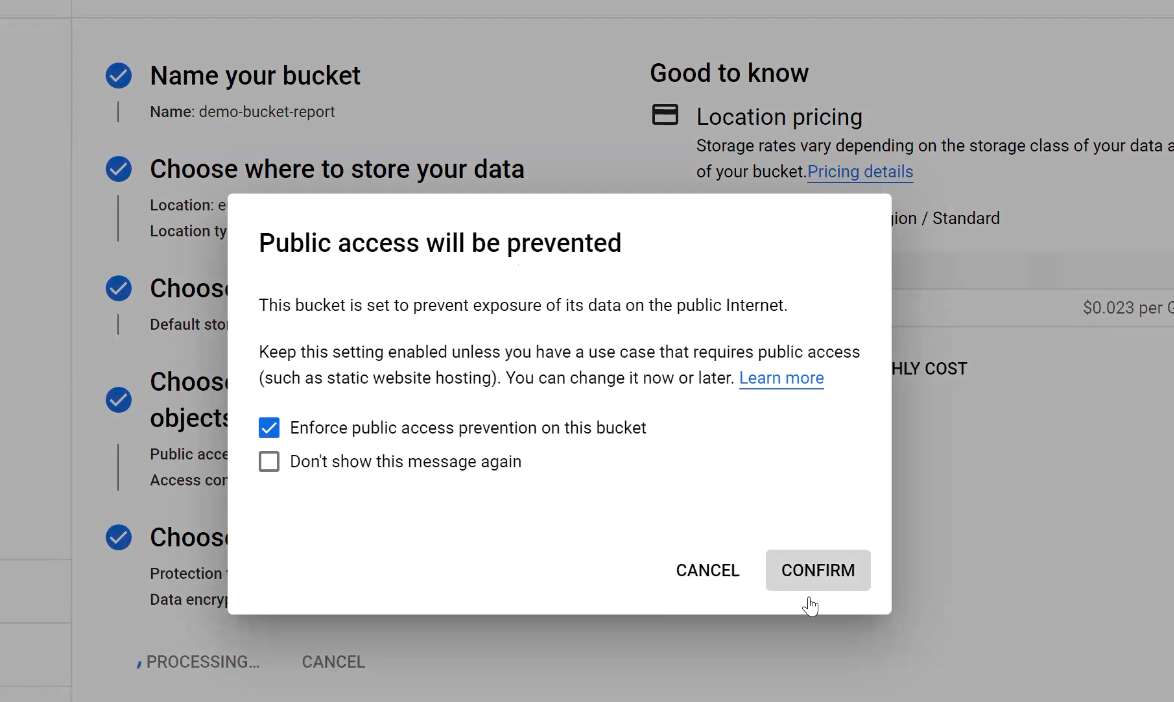

- After, filling all the required details above, click the create and confirm button to complete the bucket creation.

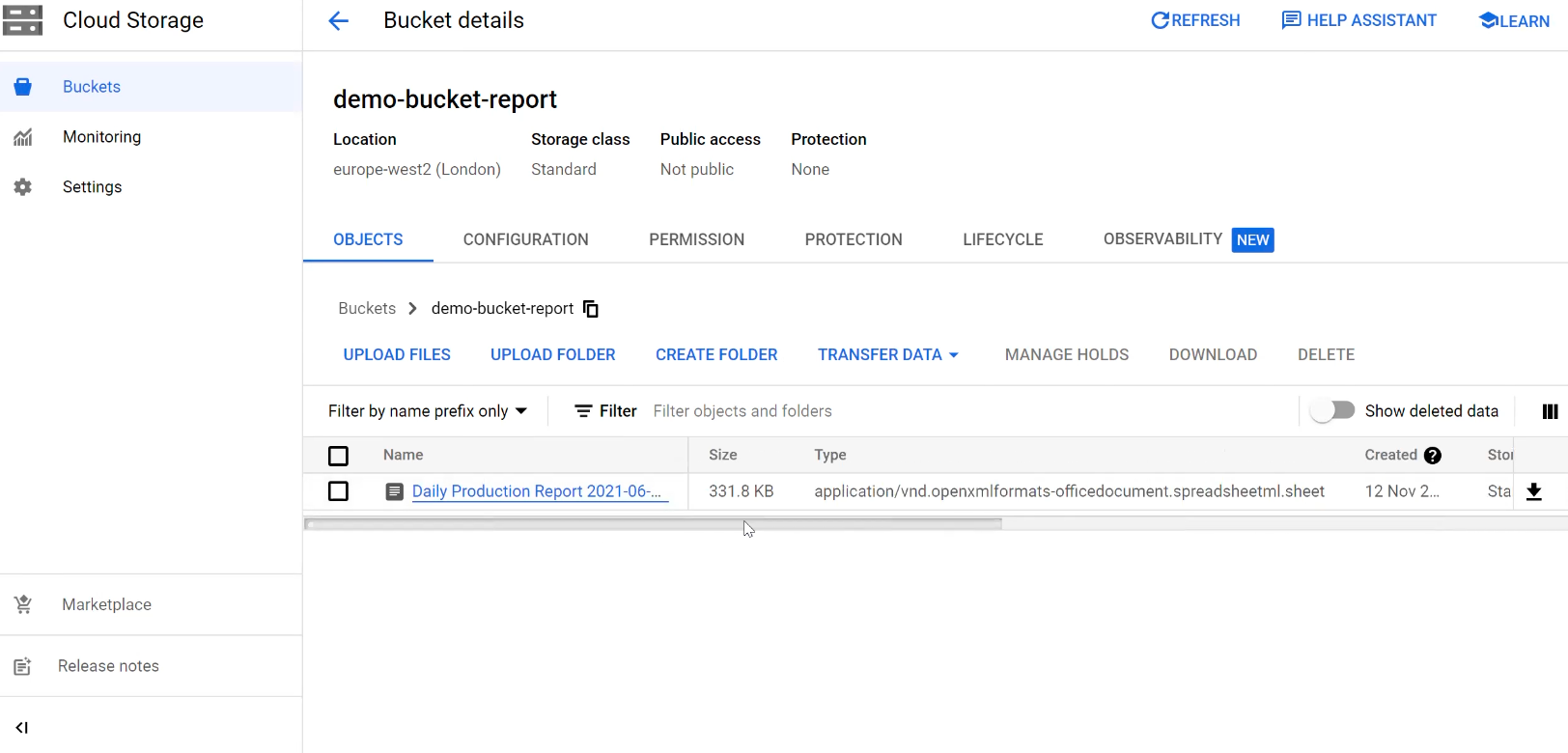

- The created bucket looks like this, after selecting confirm.

- Proceed to upload the files to be stored in the bucket, by selecting it from demo daily report folder (I will be uploading the first data only for now from my local computer)

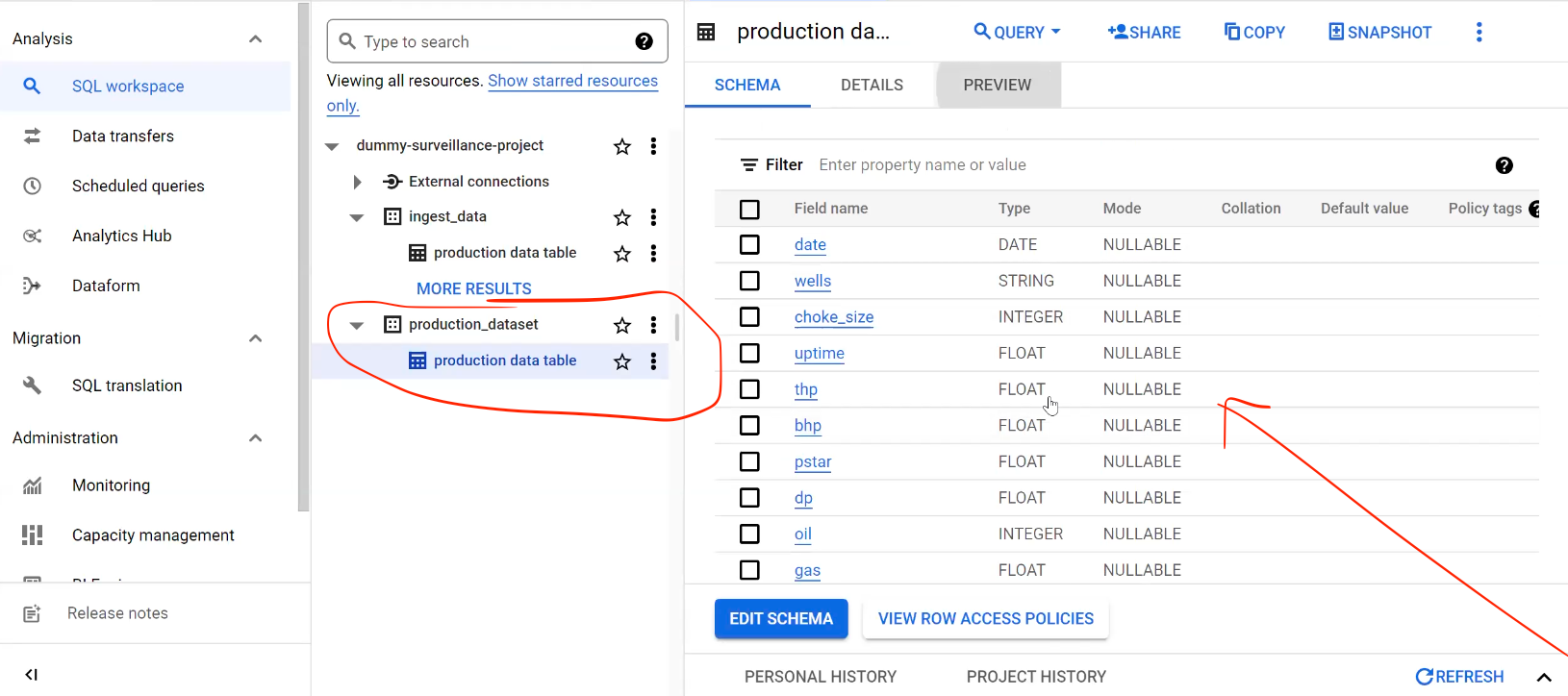

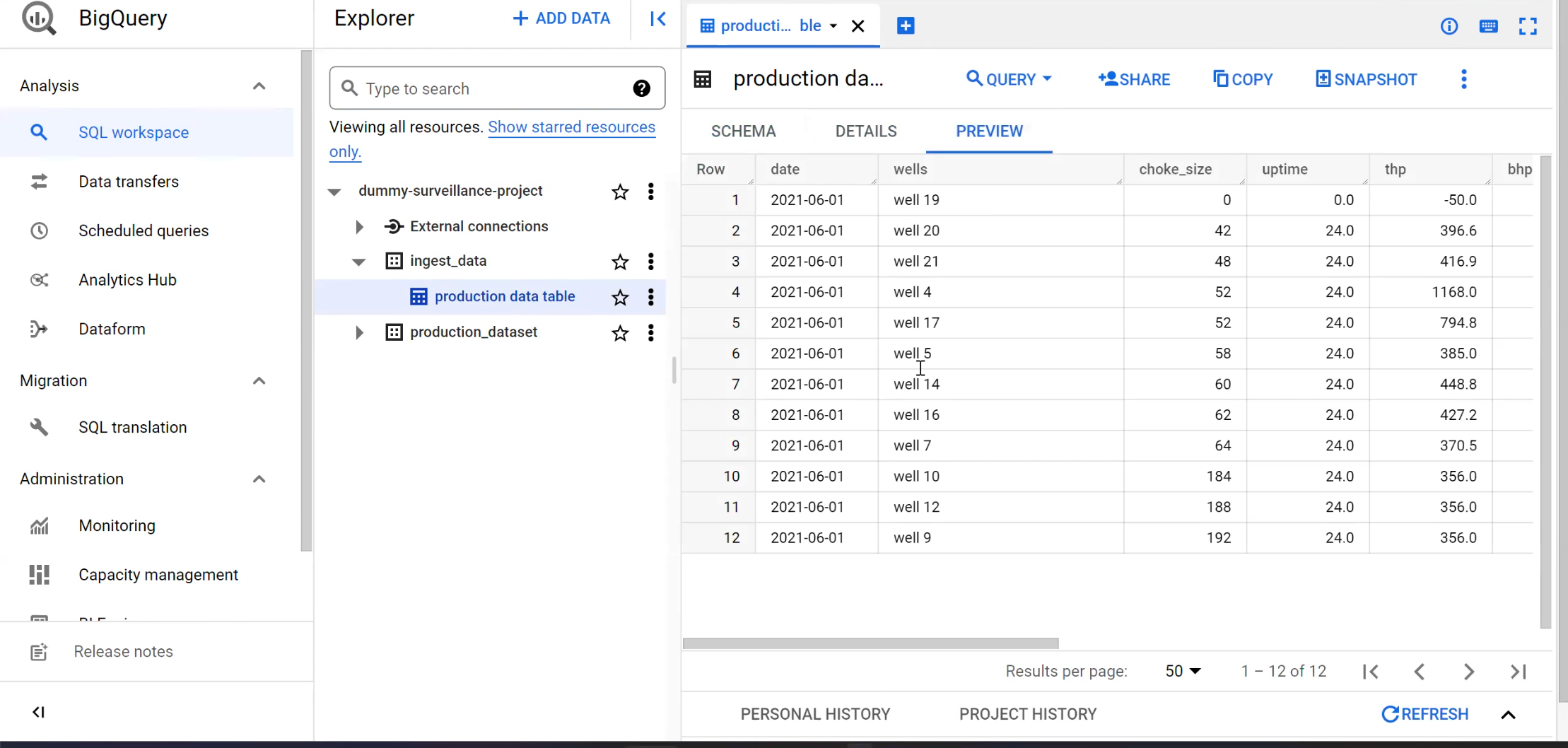

- Next, create a table in BigQuery. This is done by going back to the GCP dashboard by clicking the “Google Cloud” in the top left corner and then select “BigQuery”

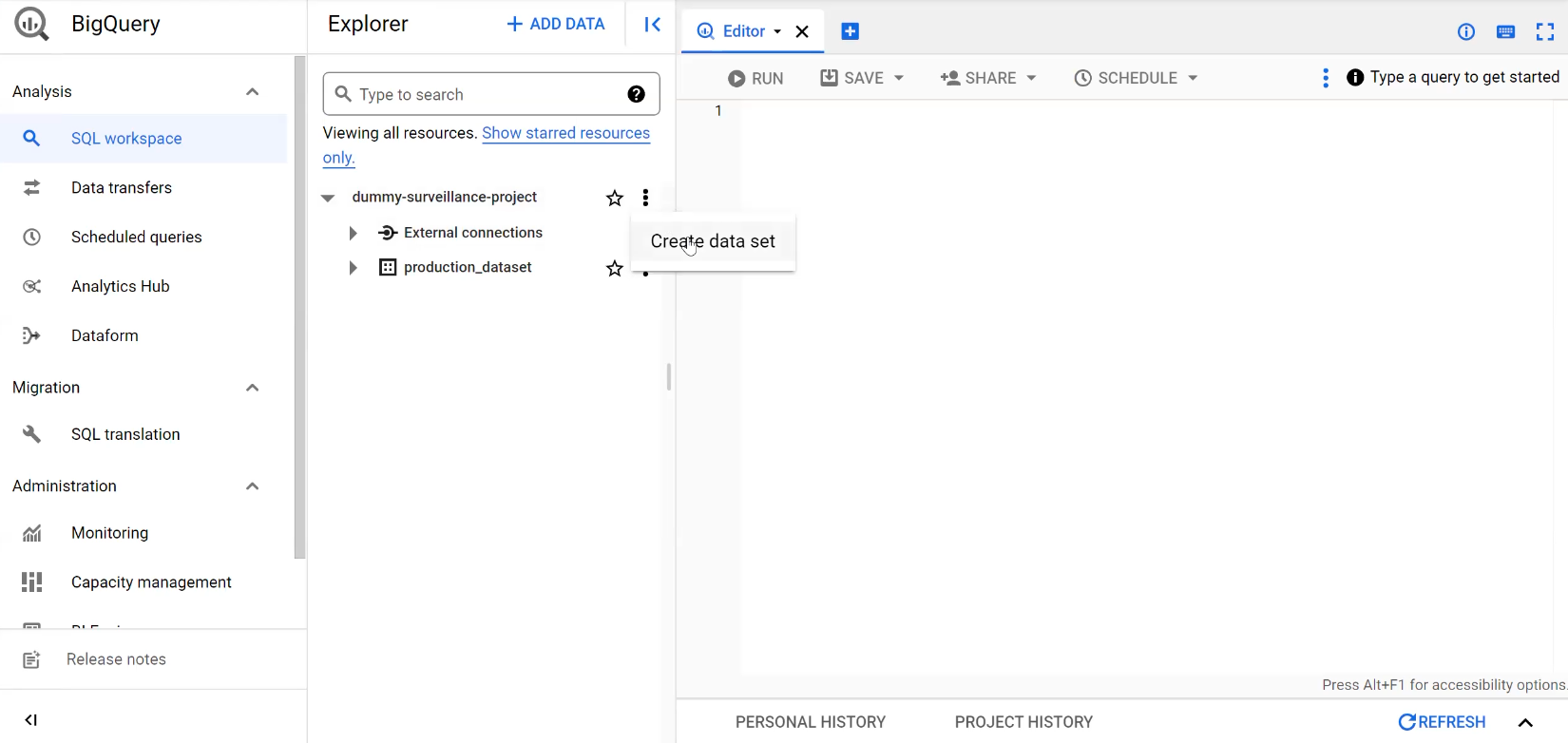

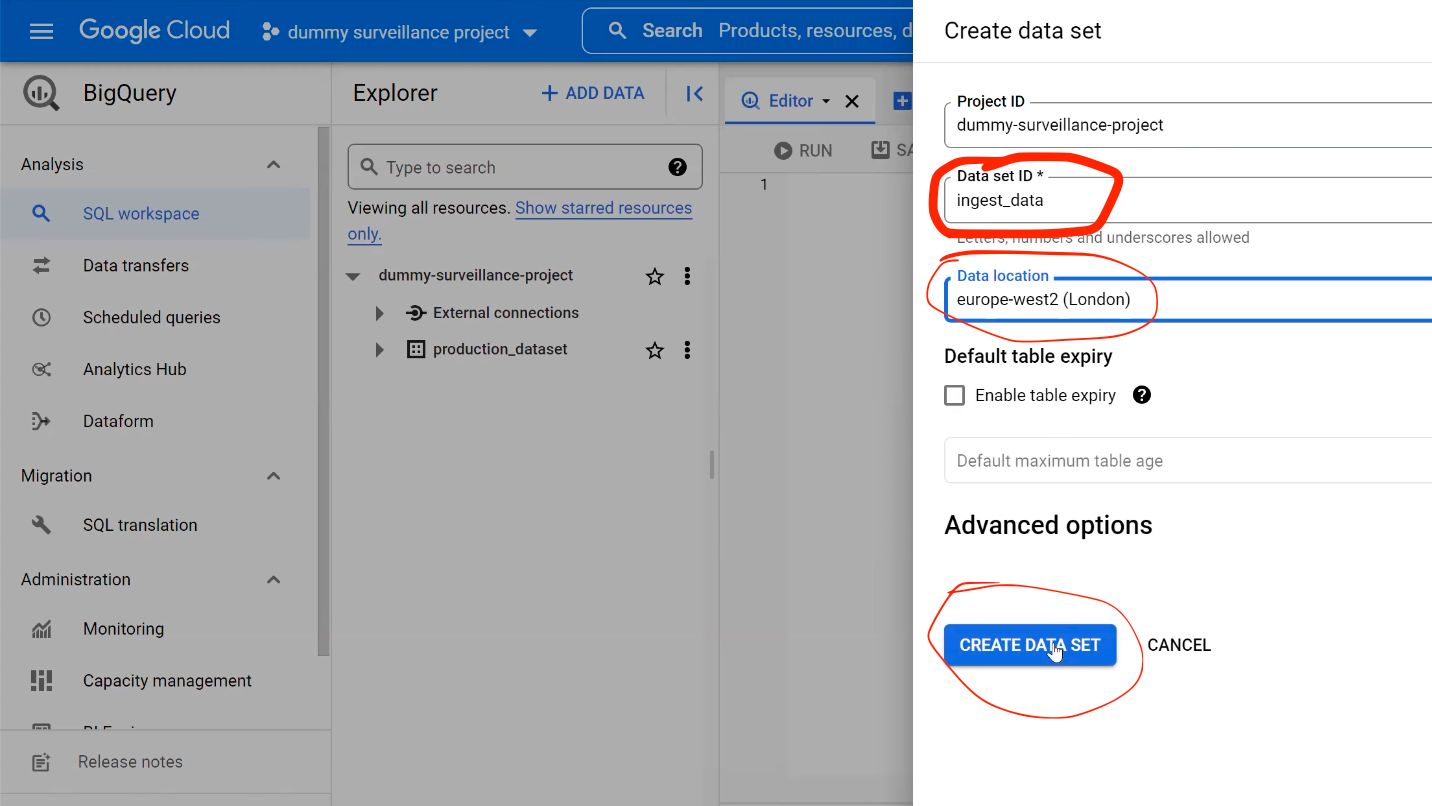

- This brings up a view like this. Expand on the project name and create dataset

- Create a dataset ID and select the location where the data is to be stored. Then, create the data set.

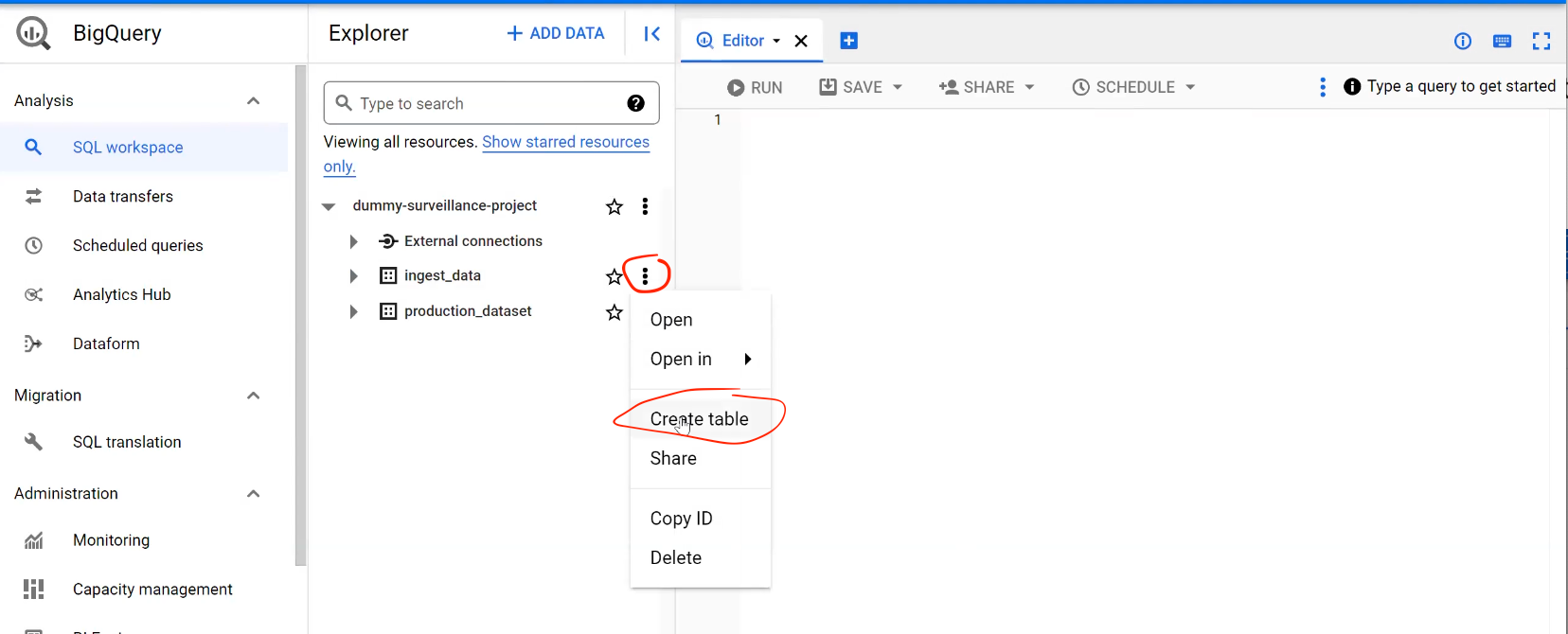

- After creating the data set, the next thing is to create a table by expanding the “ingest data” created to create a table where the data will be stored.

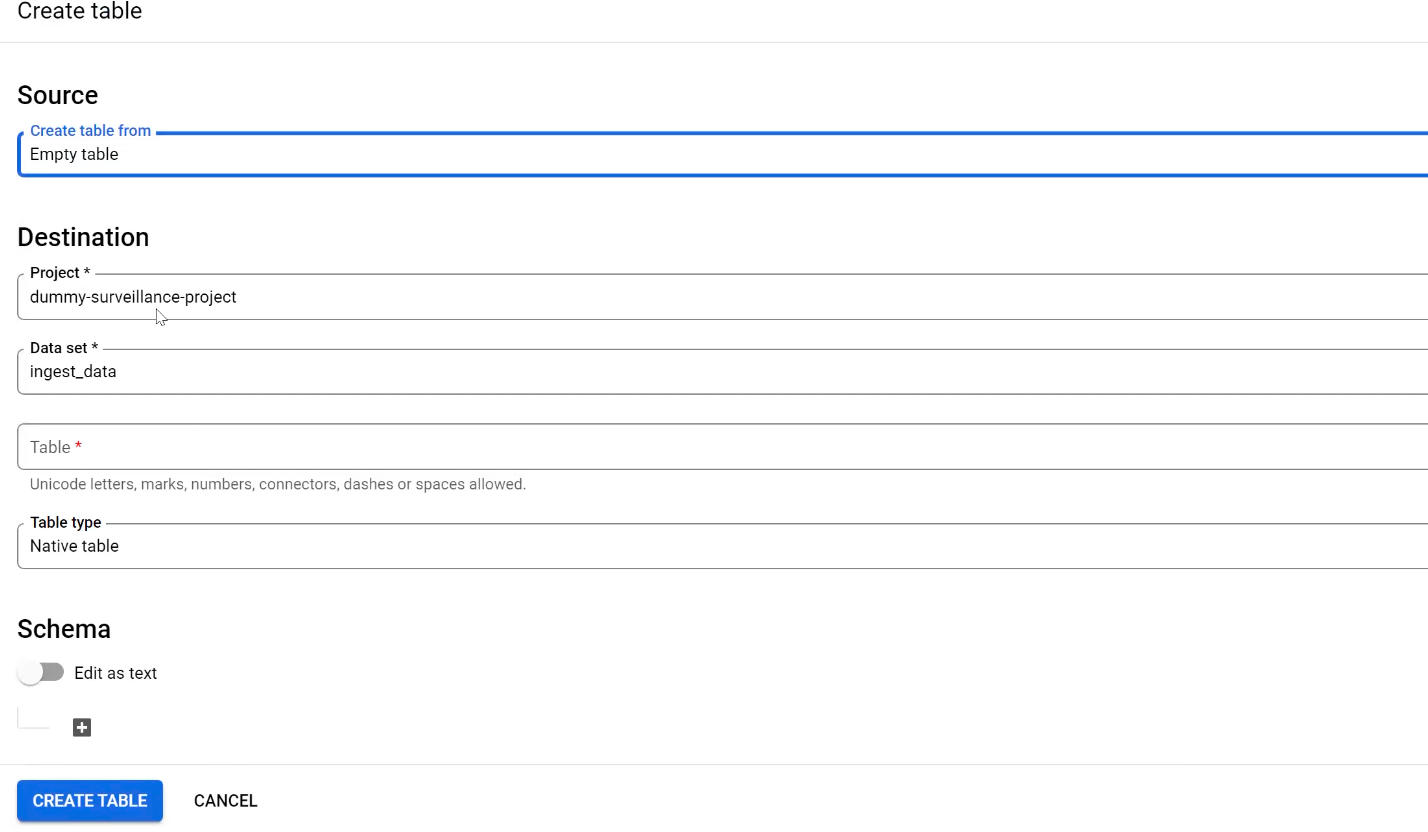

- This bring up a navigator for the details of the table to be created. Create an empty table, with native table as the table type.

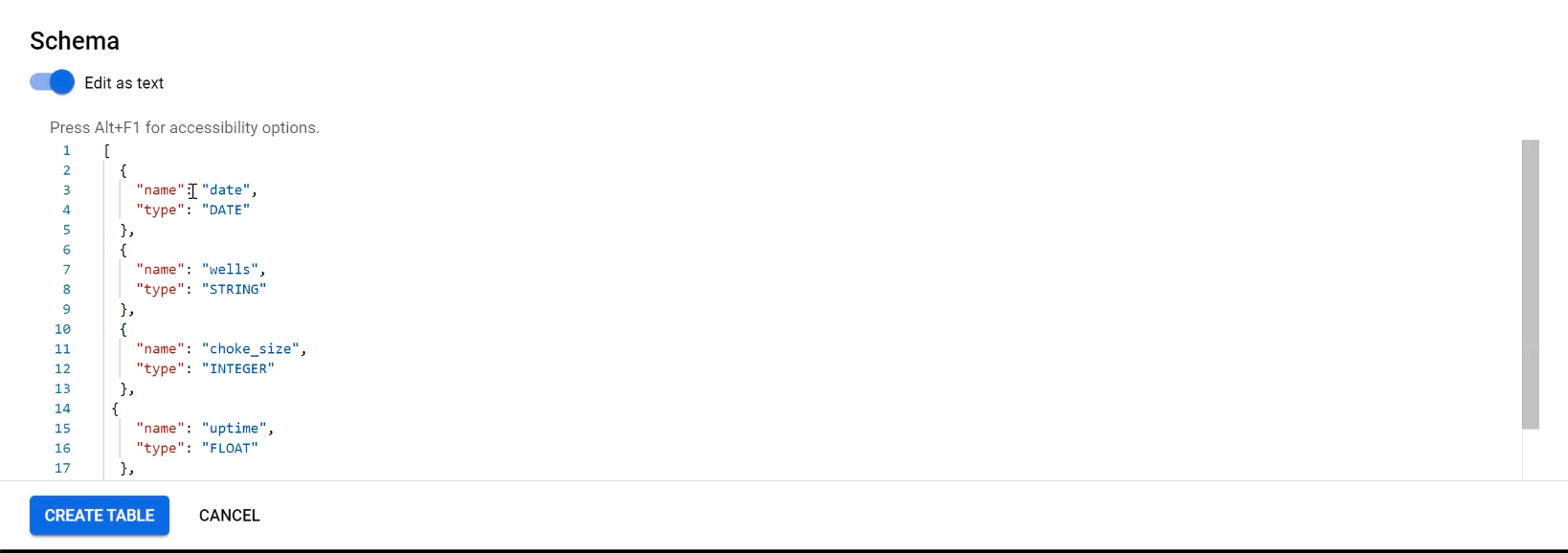

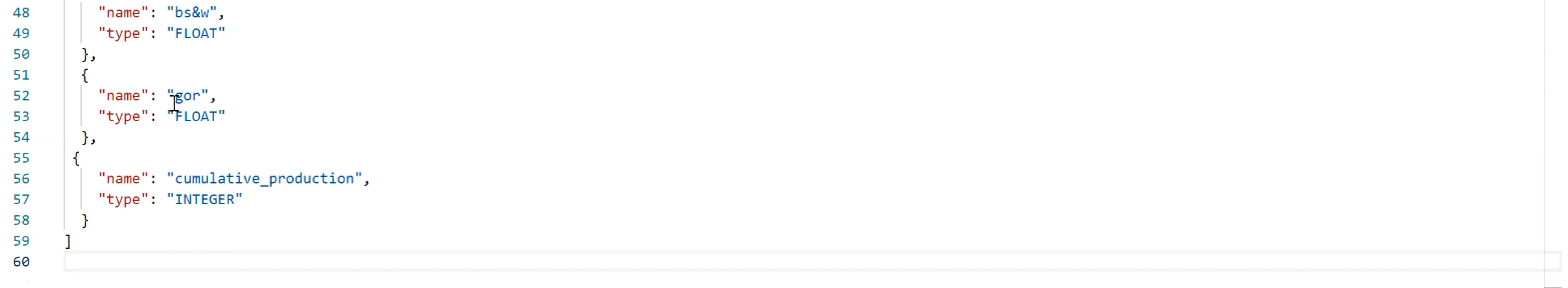

- Turn on the schema and create the columns and the data types of each columns

- Then, the partition and cluster settings and advanced options- which are not required for this project. Then, select “create” to create the production table as highlighted in the image below.

Implementation of the project in Google Cloud Platform (GCP) and Python using Jupyter Notebook

Note: To automate getting our local files into our GCP bucket using python in a Jupyter Notebook, the following code implementation is necessary.

- Importing libraries necessary to connect to GCP, BigQuery and other necessary application for the project.

# Importing libaries

from google.cloud import storage

import pandas as pd

import datetime

import xlrd

import openpyxl

import fsspec

import gcsfs

import itertools

import os

import pandas_gbq

from google.cloud import bigquery

from pandas.api.types import CategoricalDtype

import time- Write a function to impute the services account details known as the google application credential in a bid to grant python access to the project. Also, to access the bucket created and append the other local files on the computer

def latest_file():

# Service account json file required

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"C:\Users\Oladayo\Desktop\demo\dummy-surveillance-project-6915f229d012.json"

storage_client = storage.Client()

prefix = 'Report'

blobs = storage_client.list_blobs('demo-bucket-report', prefix = prefix)

blob_created_time = []

for blob in blobs:

blob_created_time.append(blob.updated)

if blob.updated == max(blob_created_time):

latest_file_name = blob.name

return latest_file_name- Next, call the function created above to see the files in the bucket. This will return the latest file in that bucket on GCP

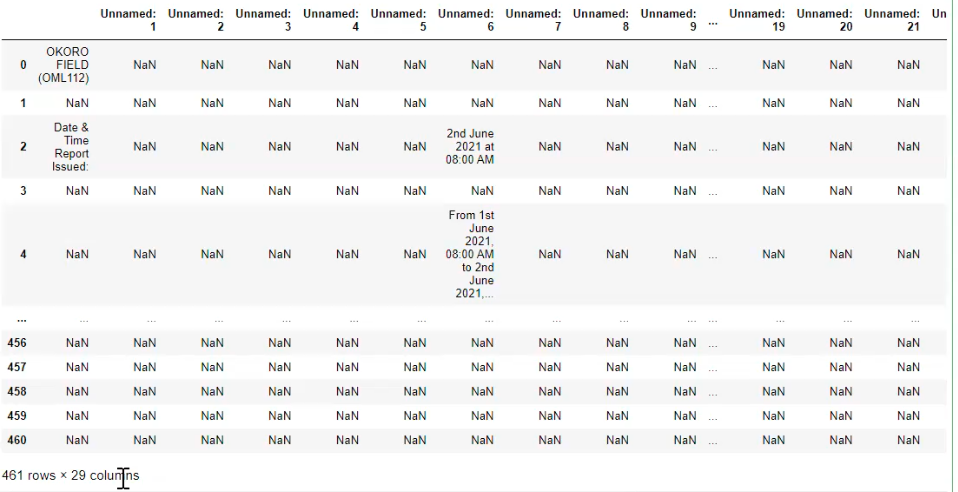

latest_file()- Next, read the file in the bucket using the pandas library

def read_latest_file():

df = pd.read_excel('gs://field-reports/{}'.format(latest_file()))

return df- Check if the file was properly read into the Jupyter notebook by calling the function

read_latest_file()The result bring a table that looks like this…

- Next, extract the date and well data from the data imported above.

def extract_date_and_well_data():

date_list = []

wells_list = []

date_list.append(read_latest_file().iloc[2,6])

wells_list.append(read_latest_file().iloc[33:47, 0:19])

K = 12

date_list = list(itertools.chain.from_iterable(itertools.repeat(i, K) for i in date_list))

return date_list, wells_list- Next, cleaning the date extracted in a bid to plot time series data and the result is converted to a dataframe

def date_data_cleaning():

date_df = pd.DataFrame(extract_date_and_well_data()[0])

date_df.columns = ['next_date']

date_df['next_date'] = date_df['next_date'].astype('string')

date_df['next_date'] = date_df['next_date'].str.replace(' at 08:00 AM',' ')

date_df['next_date'] = pd.to_datetime(date_df['next_date']).dt.date

date_df['date'] = (date_df['next_date'] - datetime.timedelta(days=1))

date_df.drop(['next_date'],axis = 1,inplace = True)

date_df = date_df.reset_index(drop = True)

return date_df The result bring a dataframe that looks like this…

- Next, cleaning the well data extracted and the result is converted to a dataframe

def wells_data_cleaning():

wells_df = pd.concat(extract_date_and_well_data()[1], axis = 0)

wells_df.drop([36,39],axis = 0, inplace = True)

wells_df.drop(['Unnamed: 2','Unnamed: 4','Unnamed: 6','Unnamed: 7','Unnamed: 12','Unnamed: 14',

'Unnamed: 17'],axis = 1,inplace = True)

wells_df = wells_df.reset_index(drop = True)

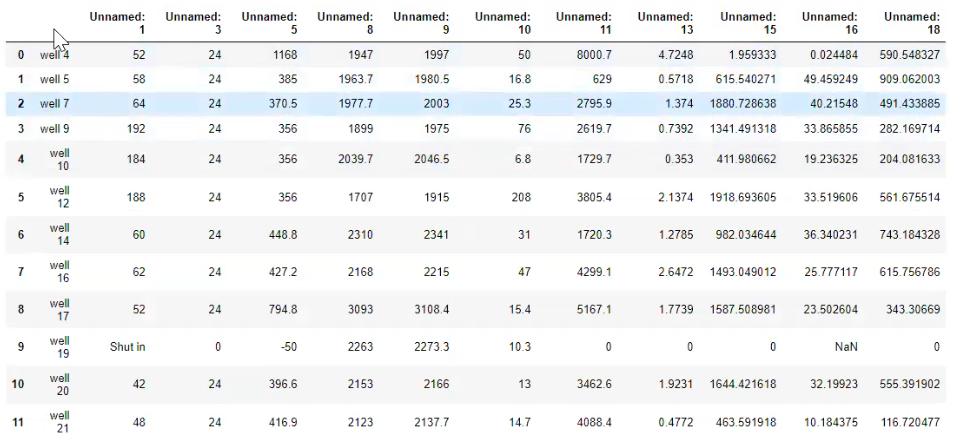

return wells_dfThe result bring a dataframe that looks like this…

- Next, after cleaning - combine the date dataframe and the cleaned well data datframe by writing a function (updated_well_data). In this function, the names of the columns will be properly formatted

def updated_wells_data():

wells_df2 = pd.concat([date_data_cleaning(), wells_data_cleaning()], axis = 1)

wells_df2.columns = ['date','wells','choke_size','uptime','thp','bhp','pstar','dp','oil',

'gas', 'water','bsw','gor']

wells_df2['date']=pd.to_datetime(wells_df2['date']).dt.date

wells_df2['choke_size']= wells_df2['choke_size'].astype(str).str.lower()

wells_df2['choke_size']= wells_df2['choke_size'].replace(['shut in'],0)

wells_df2[['choke_size','uptime', 'thp', 'bhp', 'pstar', 'dp', 'oil', 'gas', 'water', 'bsw', 'gor']] = wells_df2[['choke_size','uptime','thp',

'bhp', 'pstar', 'dp', 'oil', 'gas', 'water', 'bsw', 'gor']].apply(pd.to_numeric)

wells_df2[['oil', 'water', 'gor']] = wells_df2[['oil', 'water', 'gor']].round(0)

wells_df2[['thp', 'bhp', 'pstar', 'dp']] = wells_df2[['thp', 'bhp', 'pstar', 'dp']].round(1)

wells_df2[['gas', 'bsw']] = wells_df2[['gas', 'bsw']].round(2)

wells_df2['uptime'] =wells_df2['uptime'].astype(float)

wells_df2 = wells_df2.astype({'choke_size': 'int', 'oil':'int', 'water': 'int', 'gor': 'int'})

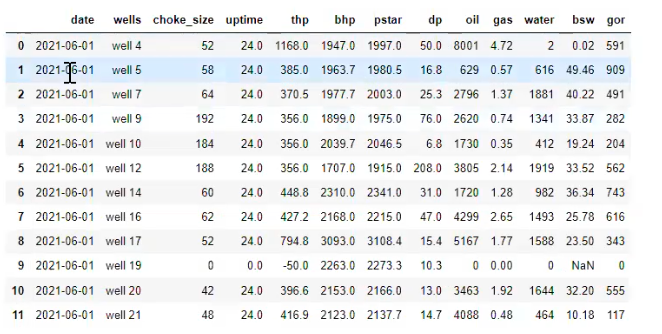

return wells_df2The result bring a dataframe that looks like this…

Note: Since I didn’t start from the beginning of the production data, I will bring in the cumulative production for the other wells with a function in a dictionary within the function.

def initial_cumulative_production():

cumulative_production = {'well 4' : 4500000, 'well 5' : 4000000, 'well 7' : 3000000, 'well 9' : 3750000,

'well 10' : 3200000, 'well 12': 2950000, 'well 14' : 2700000, 'well 16' : 2560000,

'well 17' : 2470000, 'well 19' : 2150000, 'well 20' : 900000, 'well 21' : 720000}

return cumulative_production- Next, reading the previous created BigQuery Table

def read_big_query_table():

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"C:\Users\Oladayo\Desktop\demo\dummy-surveillance-project-6915f229d012.json"

client = bigquery.Client()

query_string = "SELECT * FROM `dummy-surveillance-project.ingest_data.production data table` "

bq_table_df = pandas_gbq.read_gbq(query_string, project_id = 'dummy-surveillance-project')

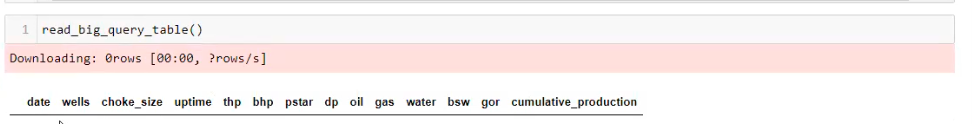

return bq_table_dfThis will give a result of all the columns names defined in the BigQuery table without any data in it.

- Next, bring in the final well data by combining without repetition to avoid redundant data.

def final_well_data():

bq_table_df = read_big_query_table()

final_wells_df = updated_wells_data()

wells_unique_name = list(final_wells_df ['wells'].unique())

well_order = CategoricalDtype(wells_unique_name, ordered = True)

if list(bq_table_df['wells'].unique()) == []:

final_wells_df['initial_cumulative_production'] = final_wells_df['wells'].map(initial_cumulative_production())

final_wells_df['cumulative_production'] = final_wells_df['initial_cumulative_production'] + final_wells_df['oil']

final_wells_df['cumulative_production'] = final_wells_df['cumulative_production'].astype(int)

final_wells_df.drop(['initial_cumulative_production'], axis = 1, inplace = True)

final_wells_df['wells'] = final_wells_df['wells'].astype(well_order)

final_wells_df.sort_values(['wells', 'date'], inplace = True)

return final_wells_df

else:

if final_wells_df['date'].unique() not in bq_table_df['date'].unique():

bq_table_df['wells'] = bq_table_df['wells'].astype(well_order)

bq_table_df.sort_values(['wells', 'date'], inplace = True)

bq_table_df.reset_index(drop = True, inplace = True)

last_cumulative_production = bq_table_df[bq_table_df['date'] ==

bq_table_df['date'].unique().max()]['cumulative_production']

last_cumulative_production.reset_index(drop = True, inplace = True)

final_wells_df['cumulative_production'] = final_wells_df['oil'] + last_cumulative_production

return final_wells_df

else:

bq_table_df['wells'] = bq_table_df['wells'].astype(well_order)

bq_table_df.sort_values(['wells', 'date'], inplace = True)

bq_table_df.reset_index(drop = True, inplace = True)

previous_date = bq_table_df['date'].unique()[list(bq_table_df['date'].unique()).index(final_wells_df['date'].unique())-1]

previous_cumulative_production = bq_table_df[bq_table_df['date'] ==

previous_date]['cumulative_production']

previous_cumulative_production.reset_index(drop = True, inplace = True)

final_wells_df['cumulative_production'] = final_wells_df['oil'] + previous_cumulative_production

final_wells_df['wells'] = final_wells_df['wells'].astype(well_order)

final_wells_df.sort_values(['wells', 'date'], inplace = True)

index = bq_table_df[bq_table_df['date'] == final_wells_df.iloc[0]['date']].index

bq_table_df.drop(index, inplace = True)

bq_table_df = pd.concat([bq_table_df, final_wells_df], axis = 0)

bq_table_df.reset_index(drop = True, inplace = True)

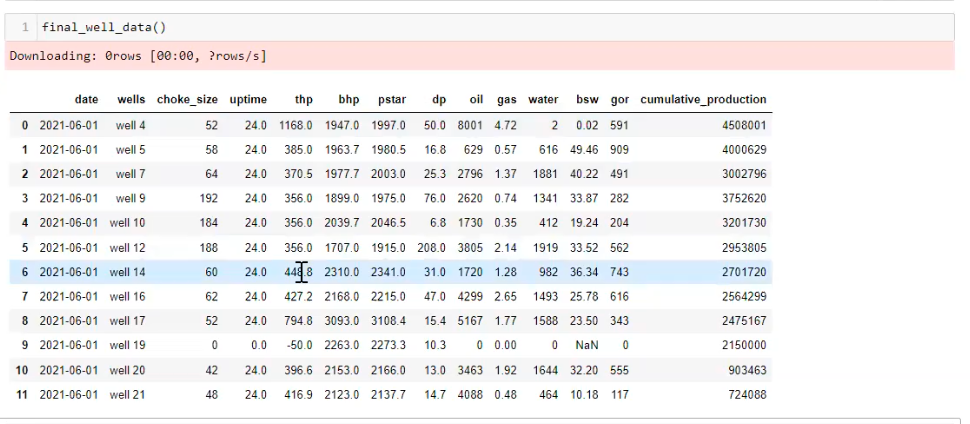

return bq_table_dfThe result when the function is called, returns the updated combined table with the cumulative production..

- Next, ingesting the final well data into the BigQuery table. There is need to first define the schema and this has to match what was created in BigQuery.

def bq_table_schema():

table_schema = [{'name':'date', 'type':'DATE'}, {'name':'wells', 'type':'STRING'},

{'name':'choke_size', 'type':'INTEGER'}, {'name':'uptime', 'type':'FLOAT'},

{'name':'thp', 'type':'FLOAT'}, {'name':'bhp', 'type':'FLOAT'},

{'name':'pstar', 'type':'FLOAT'}, {'name':'dp', 'type':'FLOAT'},

{'name':'oil', 'type':'INTEGER'}, {'name':'gas', 'type':'FLOAT'},

{'name':'water', 'type':'INTEGER'}, {'name':'bsw', 'type':'FLOAT'},

{'name':'gor', 'type':'FLOAT'}, {'name':'cumulative_production', 'type':'INTEGER'},

]

return table_schema- Next, save to BigQuery Table without repetitions to avoid redundant data in BigQuery

def bq_table_schema():

table_schema = [{'name':'date', 'type':'DATE'}, {'name':'wells', 'type':'STRING'},

{'name':'choke_size', 'type':'INTEGER'}, {'name':'uptime', 'type':'FLOAT'},

{'name':'thp', 'type':'FLOAT'}, {'name':'bhp', 'type':'FLOAT'},

{'name':'pstar', 'type':'FLOAT'}, {'name':'dp', 'type':'FLOAT'},

{'name':'oil', 'type':'INTEGER'}, {'name':'gas', 'type':'FLOAT'},

{'name':'water', 'type':'INTEGER'}, {'name':'bsw', 'type':'FLOAT'},

{'name':'gor', 'type':'FLOAT'}, {'name':'cumulative_production', 'type':'INTEGER'},

]

return table_schema- Then save the data and call the function to implement the action with the code below.

def save_to_bq_table():

bq_table_df = read_big_query_table()

final_wells_df = updated_wells_data()

if list(bq_table_df['wells'].unique()) == []:

return final_well_data().to_gbq('ingest_data.production data table', if_exists = 'append',

table_schema = bq_table_schema(), project_id = 'dummy-surveillance-project')

else:

if final_wells_df['date'].unique() not in bq_table_df['date'].unique():

return final_well_data().to_gbq('production_dataset.production data table', if_exists = 'append',

table_schema = bq_table_schema(), project_id = 'dummy-surveillance-project')

else:

return final_well_data().to_gbq('production_dataset.production data table', if_exists = 'replace',

table_schema = bq_table_schema(), project_id = 'dummy-surveillance-project')- Next, go back to GCP in the project created to view the data ingested using python in Jupyter Notebook.

Note: This ingested data can then be used to perform further surveillance analysis and visualization.

Conclusion

Creating an Oilfield Production Surveillance Dashboard using Google Cloud Platform (GCP) can provide valuable insights and analytics for managing oilfield production. With the steps outlined in this data digest, including setting up GCP, defining parameters for each well, building the dashboard, and implementing the project using Python and Jupyter Notebook, you can effectively monitor and analyze production data in real-time. This can enable oilfield operators to make data-driven decisions, optimize production processes, and maximize operational efficiency. Leveraging the power of GCP and modern data analytics techniques, oilfield operators can gain a competitive edge in the industry by unlocking valuable insights from their production data.

If you want to explore more on building your SQL skills, visit our learning path HERE

Also, if you want to get started with data analytics and looking to improving your skills, you can check out our Learning Track

Empowering individuals and businesses with the tools to harness data, drive innovation, and achieve excellence in a digital world.

2026Resagratia (a brand of Resa Data Solutions Ltd). All Rights Reserved.